One of the most important aspects of machine learning, if not the most important, is achieving accurate and reliable predictions. One approach to this goal that has gained prominence is Bootstrap Aggregating, more commonly known as bagging. This article explains bagging in machine learning, compares bagging and boosting, gives an example of a bagging classifier, walks through how bagging works, and explores its advantages and drawbacks.

What is Bagging in Machine Learning?


What is Bagging?

Imagine you’re trying to guess the weight of an object by asking multiple people for their estimates. Individually, their guesses might vary widely, but by averaging all the estimates, you can arrive at a more reliable figure. This is the essence of bagging: combining the outputs of several models to produce a more accurate and robust prediction.

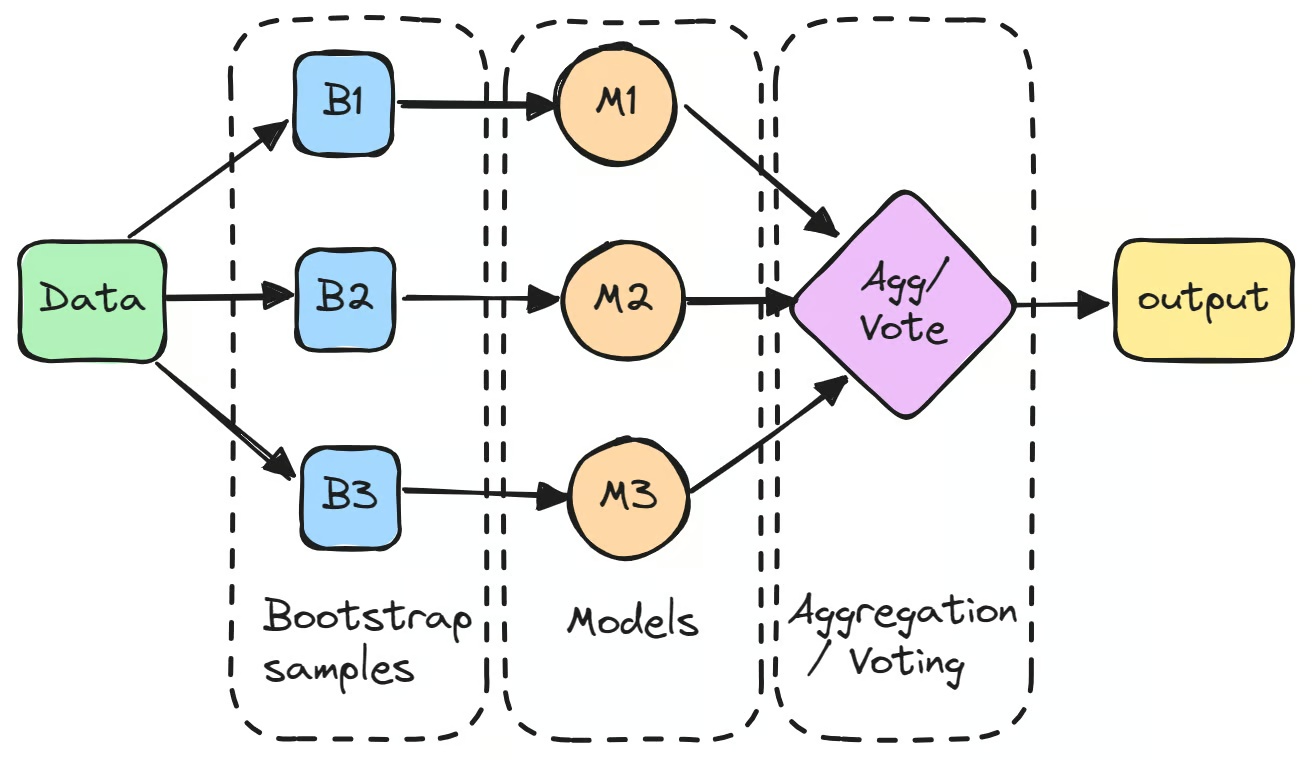

The process begins by creating multiple subsets of the original dataset through bootstrapping, which is random sampling with replacement. Each subset is then used to train a separate model independently.

These individual models, often referred to as “weak learners,” might not perform exceptionally well on their own due to high variance. However, when their predictions are aggregated, typically by averaging for regression tasks or majority voting for classification tasks, the combined result often surpasses the performance of any single model.
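Written as formulas, the two aggregation rules look like this. The notation is a common textbook convention chosen for illustration, not taken from a specific source: B is the number of bootstrap samples, \hat{f}^{*b} is the model trained on the b-th sample, and k ranges over the class labels.

```latex
\begin{aligned}
\hat{f}_{\mathrm{bag}}(x) &= \frac{1}{B} \sum_{b=1}^{B} \hat{f}^{*b}(x)
  && \text{(regression: average the predictions)} \\
\hat{y}_{\mathrm{bag}}(x) &= \arg\max_{k} \sum_{b=1}^{B} \mathbf{1}\!\left[\hat{f}^{*b}(x) = k\right]
  && \text{(classification: majority vote)}
\end{aligned}
```

Here \mathbf{1}[\cdot] equals 1 when the b-th model predicts class k and 0 otherwise, so the argmax picks the class with the most votes.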

A well-known bagging classifier example is the Random Forest algorithm, which constructs an ensemble of decision trees to improve predictive performance. That said, bagging should not be confused with boosting. Boosting trains models sequentially to reduce bias, whereas bagging trains models in parallel to reduce variance.

Both bagging and boosting in machine learning aim to improve model performance, but they target different aspects of the model’s behavior.

Why is Bagging Useful?

One of the key advantages of bagging in machine learning is its ability to reduce variance, helping models generalize better to unseen data. Bagging is particularly beneficial when dealing with algorithms that are sensitive to fluctuations in the training data, such as decision trees.
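Why averaging reduces variance can be sketched with a standard result. It rests on a simplifying assumption: each of the B models’ predictions at a point x has the same variance σ², and every pair of predictions has the same correlation ρ. Real bootstrap-trained models only approximately satisfy this.

```latex
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B}\hat{f}^{*b}(x)\right)
= \frac{1}{B^{2}}\left[B\sigma^{2} + B(B-1)\rho\sigma^{2}\right]
= \rho\sigma^{2} + \frac{1-\rho}{B}\,\sigma^{2}
```

As B grows, the second term shrinks toward zero, but the first term ρσ² remains. This is why bagging helps most when σ² is large, as with high-variance models such as deep decision trees, and why keeping the base models less correlated matters.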

By reducing overfitting, bagging produces a more stable and reliable model. When comparing bagging and boosting in machine learning, bagging focuses on reducing variance by training multiple models in parallel, whereas boosting aims to reduce bias by training models sequentially.

An example of bagging in machine learning can be seen in financial risk prediction, where multiple decision trees are trained on different subsets of historical market data. By aggregating their predictions, bagging creates a more robust forecasting model, reducing the impact of individual model errors.

In essence, bagging in machine learning leverages the collective wisdom of multiple models to deliver predictions that are more accurate and reliable than those derived from individual models alone.

How Bagging in Machine Learning Works: Step-by-Step

To fully understand how bagging enhances model performance, let’s break down the process step by step.

Take Multiple Bootstrap Samples from the Dataset

The first step in bagging in machine learning is to create multiple new subsets of the original dataset using bootstrapping. This technique randomly samples the data with replacement, typically drawing as many points as the original dataset contains. As a result, some data points might appear multiple times in the same subset while others may not appear at all, so each model is trained on a slightly different version of the data.
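Here is a minimal sketch of this step in plain Python with no dependencies. The helper name `bootstrap_sample` and the stand-in dataset of 1,000 integer "rows" are hypothetical, chosen only for illustration:

```python
import random


def bootstrap_sample(data, rng):
    """Return a bootstrap sample: len(data) items drawn uniformly with replacement."""
    return [rng.choice(data) for _ in range(len(data))]


rng = random.Random(42)   # fixed seed so the output is reproducible
data = list(range(1000))  # hypothetical stand-in dataset: 1,000 distinct "rows"
samples = [bootstrap_sample(data, rng) for _ in range(5)]

for i, sample in enumerate(samples):
    # Duplicates mean each sample covers only part of the original data.
    unique_fraction = len(set(sample)) / len(data)
    print(f"sample {i}: size={len(sample)}, unique rows={unique_fraction:.1%}")
```

Because sampling is with replacement, each bootstrap sample typically contains only about 63% of the distinct original points. The expected fraction is 1 - (1 - 1/n)^n, which approaches 1 - 1/e ≈ 0.632 as n grows; the rest of the sample is duplicates.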

Train a Separate Model on Each Sample

Each bootstrap sample is then used to train a separate model, typically of the same type, such as a decision tree. These models, often called “base learners” or “weak learners,” are trained independently on their respective subsets. In the Random Forest algorithm, for example, every base learner is a decision tree, and decision trees form the backbone of many bagging-based models. While each individual model might not perform well on its own, each one contributes a slightly different view of the data based on its own training sample.
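The sketch below illustrates this step under clearly hypothetical choices: a tiny one-dimensional regression tree written from scratch (`fit_tree` and `predict_tree` are illustrative helpers, not a library API), toy sine-wave data, and B = 10 trees of depth 3. It shows the mechanics only and is not a substitute for a library implementation:

```python
import math
import random


def bootstrap_sample(data, rng):
    """Draw len(data) items uniformly at random, with replacement."""
    return [rng.choice(data) for _ in range(len(data))]


def fit_tree(points, max_depth):
    """Fit a tiny 1-D regression tree to (x, y) pairs by minimizing squared error.

    Returns a leaf value (float) or a tuple (threshold, left_subtree, right_subtree).
    """
    leaf_value = sum(y for _, y in points) / len(points)
    if max_depth == 0 or len(points) < 2:
        return leaf_value
    pts = sorted(points)
    n = len(pts)
    total_s = sum(y for _, y in pts)
    total_q = sum(y * y for _, y in pts)
    best = None  # (sum of squared errors, split index)
    s = q = 0.0  # running sum and sum of squares of y on the left side
    for i in range(1, n):
        y = pts[i - 1][1]
        s += y
        q += y * y
        if pts[i - 1][0] == pts[i][0]:
            continue  # no threshold can separate equal x values
        sse = (q - s * s / i) + ((total_q - q) - (total_s - s) ** 2 / (n - i))
        if best is None or sse < best[0]:
            best = (sse, i)
    if best is None:
        return leaf_value  # every x is identical, so no split is possible
    i = best[1]
    threshold = (pts[i - 1][0] + pts[i][0]) / 2
    return (threshold,
            fit_tree(pts[:i], max_depth - 1),
            fit_tree(pts[i:], max_depth - 1))


def predict_tree(tree, x):
    """Walk down the tree until reaching a leaf value."""
    while isinstance(tree, tuple):
        threshold, left, right = tree
        tree = left if x <= threshold else right
    return tree


rng = random.Random(0)  # fixed seed for reproducibility
# Hypothetical toy data: y = sin(x) plus Gaussian noise, for x in [0, 6].
xs = [rng.uniform(0, 6) for _ in range(60)]
train = [(x, math.sin(x) + rng.gauss(0, 0.3)) for x in xs]

B = 10  # number of base learners
models = [fit_tree(bootstrap_sample(train, rng), max_depth=3) for _ in range(B)]

# Each tree saw a different bootstrap sample, so predictions at the same x differ.
print([round(predict_tree(m, 2.0), 3) for m in models])
```

Each tree is fit without looking at the others, which is why this step parallelizes easily.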

Aggregate the Predictions

After training the models, their predictions are aggregated to form the final output.

- For regression tasks, the predictions are averaged, reducing the model’s variance.

- For classification tasks, the final prediction is determined through majority voting, where the class predicted by most models is selected. This method provides a more stable prediction compared to a single model’s output.
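Both aggregation rules take only a few lines. A minimal Python sketch (the function names are illustrative, not from any particular library):

```python
from collections import Counter
from statistics import mean


def aggregate_regression(predictions):
    """Bagged regression output: the average of the base models' predictions."""
    return mean(predictions)


def aggregate_classification(votes):
    """Bagged classification output: the label predicted by the most models.

    Ties go to the label that appears first in `votes`, because
    Counter.most_common orders equal counts by first appearance.
    """
    return Counter(votes).most_common(1)[0][0]


print(aggregate_regression([2.0, 3.5, 4.0]))              # average of three models
print(aggregate_classification(["spam", "ham", "spam"]))  # majority label
```

Hard voting is only one option: some library implementations, such as scikit-learn’s BaggingClassifier, average predicted class probabilities instead when the base models provide them.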

Final Prediction

By combining the predictions from multiple models, bagging reduces the impact of errors from any one model, improving overall accuracy. This aggregation process is what makes bagging such a powerful technique, especially in machine learning tasks where high-variance models like decision trees are used. It effectively smooths out inconsistencies in individual model predictions, resulting in a stronger final model.
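To see the variance reduction directly, the experiment below draws many independent training sets from the same noisy process. It then compares how much a single model’s prediction at one point varies with how much a bagged ensemble’s prediction varies. One deliberate swap keeps the code short: the base learner is a 1-nearest-neighbor regressor rather than a decision tree. It is also a high-variance model, since its prediction depends on a single noisy training point. The data, seed, and sizes are hypothetical:

```python
import math
import random
import statistics


def bootstrap_sample(data, rng):
    """Draw len(data) items uniformly at random, with replacement."""
    return [rng.choice(data) for _ in range(len(data))]


def nn_predict(train, x):
    """1-nearest-neighbor regression: return the y of the training point closest to x."""
    return min(train, key=lambda p: abs(p[0] - x))[1]


def bagged_nn_predict(train, x, n_models, rng):
    """Bagging: average the 1-NN predictions of n_models bootstrap-trained models."""
    preds = [nn_predict(bootstrap_sample(train, rng), x) for _ in range(n_models)]
    return sum(preds) / len(preds)


def make_training_set(rng, n=50):
    """Hypothetical toy data: y = sin(x) + Gaussian noise, x uniform on [0, 6]."""
    xs = [rng.uniform(0, 6) for _ in range(n)]
    return [(x, math.sin(x) + rng.gauss(0, 0.3)) for x in xs]


rng = random.Random(1)  # fixed seed for reproducibility
x0 = 2.0  # the input at which predictions are compared
single, bagged = [], []
for _ in range(200):  # many independent training sets from the same noisy process
    train = make_training_set(rng)
    single.append(nn_predict(train, x0))
    bagged.append(bagged_nn_predict(train, x0, n_models=50, rng=rng))

print(f"std of single-model predictions: {statistics.pstdev(single):.3f}")
print(f"std of bagged predictions:       {statistics.pstdev(bagged):.3f}")
```

The bagged predictions should vary noticeably less from one training set to the next. Different bootstrap samples have different nearest neighbors to x0, so averaging blends several nearby points instead of trusting one.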

While bagging is effective for stabilizing predictions, there are a few things to keep in mind. First, an ensemble can still overfit if the base models are too complex, even though bagging generally reduces overfitting.

Second, bagging is computationally expensive, so reducing the number of base learners or considering more efficient ensemble methods can help, and choosing the right GPU for ML and DL is always important.

Third, aim for some diversity among base learners, since averaging models that make the same errors does little. Finally, if you’re working with imbalanced data, techniques like SMOTE can be useful before applying bagging to avoid poor performance on minority classes.

Applications of Bagging

Now that we’ve explored how bagging works, it’s time to look at where it’s actually used in the real world. Bagging has found its way into a variety of industries, helping to improve the accuracy and stability of predictions in complex scenarios. Let’s take a closer look at some of the most impactful applications:

- Classification and Regression: Bagging is widely used to improve the performance of classifiers and regressors by reducing variance and preventing overfitting. For instance, Random Forests, which utilize bagging, are effective in tasks like image classification and predictive modeling.

- Anomaly Detection: In fields such as fraud detection and network intrusion detection, bagged ensembles can identify outliers and anomalies in data more reliably than a single model.

- Financial Risk Assessment: Bagging techniques are employed in banking to enhance credit scoring models, improving the accuracy of loan approval processes and financial risk evaluations.

- Medical Diagnostics: In healthcare, bagging has been applied to detect neurocognitive disorders like Alzheimer’s disease by analyzing MRI datasets, aiding in early diagnosis and treatment planning.

- Natural Language Processing (NLP): Bagging contributes to tasks such as text classification and sentiment analysis by aggregating predictions from multiple models, leading to more robust language understanding.

Advantages and Disadvantages of Bagging

Like any machine learning technique, bagging comes with its own set of advantages and disadvantages. Understanding these can help determine when and how to use bagging in your models.

Advantages of Bagging:

- Reduces Variance and Overfitting: One of the most significant advantages of bagging in machine learning is its ability to reduce variance, which helps prevent overfitting. By training multiple models on different subsets of the data, bagging keeps the final model from becoming too sensitive to fluctuations in the training data, resulting in a more generalizable and stable model.

- Works Well with High-Variance Models: Bagging is especially effective when used with high-variance models like decision trees. These models tend to overfit the data and have high variance, but bagging mitigates this by averaging or voting over multiple models. This helps make predictions more reliable and less likely to be swayed by noise in the data.

- Improves Model Stability and Performance: By combining multiple models trained on different subsets of the data, bagging often leads to better overall performance. It helps improve predictive accuracy while reducing the sensitivity of the model to small changes in the dataset, which ultimately makes the model more reliable.

Disadvantages of Bagging:

- Increases Computational Cost: Since bagging requires training multiple models, it naturally increases the computational cost. Training and aggregating the predictions from many models can be time-consuming, especially when using large datasets or complex models like decision trees.

- Not Effective for Low-Variance Models: While bagging is highly effective for high-variance models, it provides little benefit for low-variance (stable) models such as linear regression. Models trained on different bootstrap samples end up nearly identical, so aggregating their predictions does little to improve the results.

- Loss of Interpretability: With the combination of multiple models, bagging can reduce the interpretability of the final model. For example, in Random Forest, the decision-making process is based on multiple decision trees, making it harder to trace the reasoning behind a specific prediction.
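The low-variance case can be checked with a similar sketch: bag an ordinary least-squares line and compare it with a single fit on the full data. The toy data (y = 1 + 2x plus noise), the seed, and the query point x = 5 are hypothetical:

```python
import random


def fit_line(points):
    """Ordinary least squares fit of y = a + b*x; returns (a, b)."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    b = sxy / sxx
    return mean_y - b * mean_x, b


rng = random.Random(7)  # fixed seed for reproducibility
# Hypothetical toy data: y = 1 + 2x plus Gaussian noise, x from 0.0 to 9.9.
data = [(i / 10, 1.0 + 2.0 * (i / 10) + rng.gauss(0, 1.0)) for i in range(100)]

a, b = fit_line(data)
single_pred = a + b * 5.0

# Bag 50 linear models, each fit on a bootstrap sample, and average their predictions.
bagged_preds = []
for _ in range(50):
    sample = [rng.choice(data) for _ in range(len(data))]
    a_b, b_b = fit_line(sample)
    bagged_preds.append(a_b + b_b * 5.0)
bagged_pred = sum(bagged_preds) / len(bagged_preds)

print(f"single model: {single_pred:.3f}, bagged: {bagged_pred:.3f}")
```

The bagged prediction lands very close to the single model’s because every bootstrap fit produces nearly the same line, so the extra training work buys almost nothing here.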

When Should I Use Bagging?

Knowing when to apply bagging in machine learning projects is key to achieving optimal results. This technique works well in specific situations, but it’s not always the best choice for every problem.

When Your Model is Prone to Overfitting

One of the primary use cases for bagging is when your model is prone to overfitting, especially with high-variance models like decision trees. These models can perform well on training data but often fail to generalize to unseen data as they become too closely fitted to the specific patterns of the training set.

Bagging helps to combat this by training multiple models on different subsets of the data and averaging or voting to create a more stable prediction. This reduces the likelihood of overfitting, making the model better at handling new, unseen data.

When You Want to Improve Stability and Accuracy

If you’re looking to improve the stability and accuracy of your model and can accept some loss of interpretability, bagging is an excellent choice. Aggregating predictions from multiple models makes the final result more robust, which is especially useful in tasks that involve noisy data.

Whether you’re tackling classification problems or regression tasks, bagging can help produce more consistent results and improve accuracy.

When You Have Sufficient Computational Resources

Another important factor in deciding whether to use bagging is the availability of computational resources. Since bagging requires training many models (which can run in parallel because they are independent), the computational cost can become significant, especially with large datasets or complex models.

If you have access to the necessary computational power, the benefits of bagging often outweigh the costs. However, if resources are limited, you might want to consider alternative techniques or limit the number of models in your ensemble.

When You’re Dealing with High-Variance Models

Bagging is particularly useful when working with models that have high variance and are sensitive to the fluctuations in the training data. Decision trees, for example, are often used with bagging in the form of Random Forests because their performance tends to vary greatly based on the training data.

By training multiple models on different data subsets and combining their predictions, bagging smooths out the variance, leading to a more reliable model.

When You Need a Robust Classifier

If you’re working on classification problems and need a robust classifier, bagging can significantly improve the stability of your predictions. For example, a Random Forest, a classic bagging classifier, can provide more accurate predictions by aggregating the results of many individual decision trees.

This approach works well when individual models might be weak, but their combined power results in a strong overall model.

Additionally, if you’re looking for the right platform to implement bagging techniques efficiently, tools like Databricks and Snowflake provide unified analytics platforms that can be very useful for managing large datasets and running ensemble methods like bagging.

If you’re looking for a less technical approach to machine learning, no-code AI tools could also be an option. While they aren’t built specifically around advanced techniques like bagging, many no-code platforms let users experiment with ensemble learning methods, including bagging, without needing extensive coding skills.

This allows you to apply more sophisticated techniques and still achieve accurate predictions while focusing on model performance rather than the underlying code.

Final Thoughts

Bagging in machine learning is a powerful technique that enhances model performance by reducing variance and improving stability. By aggregating the predictions of multiple models trained on different subsets of data, bagging helps to create more accurate and reliable results. It’s especially effective for high-variance models like decision trees, where it helps prevent overfitting and ensures the model generalizes better to unseen data.

While bagging has significant advantages, such as reducing overfitting and improving accuracy, it does come with a few trade-offs. It increases computational cost due to training multiple models and may reduce interpretability. Despite these drawbacks, its ability to boost performance makes it a valuable technique in ensemble learning, alongside other methods like boosting and stacking.

Have you used bagging in your machine learning projects? Let us know about your experience and how it worked for you!