Machine learning and its subcategory, deep learning, require a substantial amount of computational power, and GPUs are by far the best way to provide it. However, not just any GPU will do, so here are the best GPUs for machine learning, why they're necessary, and how you can choose the right one for your project!

Why Do I Need a GPU for Machine Learning?

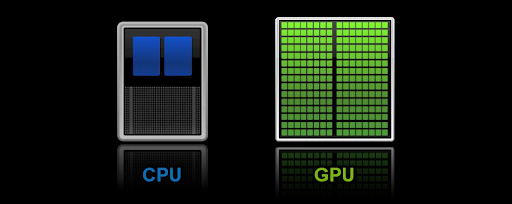

As mentioned earlier, machine learning requires a lot of computational power. CPUs work fine for smaller-scale applications, single-threaded tasks, and general-purpose computing, but heavier ML workloads quickly run into frustrations and bottlenecks on them. The performance gap comes down to GPUs' parallel processing capability and the large difference in core counts. A typical CPU might have 4 to 16 cores, while the best GPUs for machine learning have thousands of cores (on Nvidia cards, CUDA cores plus specialized Tensor Cores), each able to handle a small part of the computation at the same time.

This parallel processing is the key to handling matrix and linear algebra calculations much better than CPUs, which is why GPUs are so much better for tasks like training large machine-learning models. However, choosing the best GPUs for machine learning isn’t easy.
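To make the parallelism point concrete, here's a minimal pure-Python sketch (not how GPU libraries are actually implemented): every element of a matrix product is its own dot product that doesn't depend on any other element, so a GPU can hand each one to a different core. The helper names are hypothetical, and the FLOP count uses the usual convention of one multiply plus one add per term.

```python
def output_element(a, b, i, j):
    """Dot product of row i of a with column j of b: one independent unit of work."""
    return sum(a[i][p] * b[p][j] for p in range(len(b)))


def matmul(a, b):
    """Naive matrix product; every (i, j) call below could run on a separate core."""
    rows, cols = len(a), len(b[0])
    return [[output_element(a, b, i, j) for j in range(cols)] for i in range(rows)]


def matmul_work(n, k, m):
    """Work in an (n x k) @ (k x m) product.

    Returns (independent dot products, total floating-point operations),
    counting 2 * k FLOPs (k multiplies and k adds) per output element.
    """
    return n * m, 2 * n * k * m


print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
print(matmul_work(4096, 4096, 4096))  # (16777216, 137438953472)
```

A single 4096 × 4096 product therefore contains about 16.8 million independent dot products and roughly 137 billion FLOPs: a CPU can only work through a handful of them at a time, while a GPU spreads them across thousands of cores.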

How to Choose the Best GPU for AI and DL

Now, most GPUs are powerful enough to handle typical tasks; however, machine learning and deep learning require another level of power and quality. So, the question that remains is: What makes a good GPU for deep learning?

A good GPU for deep learning should have the following qualities and features:

CUDA Cores, Tensor Cores, and Compatibility

AMD and Nvidia offer the best GPUs for machine learning and DL, with Nvidia well ahead. This is largely thanks to Nvidia's Tensor and CUDA cores. Tensor Cores accelerate the calculations most common in AI and machine learning, such as matrix multiplications and convolutions (used in deep neural networks). CUDA cores, on the other hand, let the best GPUs for AI training perform parallel processing by distributing operations efficiently across the GPU. GPUs without comparable hardware typically struggle with ML and DL workloads.
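Tensor Cores speed up matrix multiplication by working on small tiles: each operation multiplies a tile of A by a tile of B and accumulates the result into a tile of C (D = A × B + C). Nvidia describes the first-generation (Volta) Tensor Core as performing one 4×4 matrix multiply-accumulate per clock. The pure-Python sketch below only mimics that tile-by-tile structure in software; the function name and the default tile size of 4 are illustrative assumptions, not how cuBLAS or any real GPU kernel is written.

```python
def tiled_matmul(a, b, tile=4):
    """Compute a @ b tile by tile, accumulating partial products into c."""
    n, k, m = len(a), len(b), len(b[0])
    c = [[0.0] * m for _ in range(n)]
    # Walk over output tiles (i0, j0) and, for each, over the shared dimension k0.
    for i0 in range(0, n, tile):
        for j0 in range(0, m, tile):
            for k0 in range(0, k, tile):
                # One tile-level multiply-accumulate: C_tile += A_tile @ B_tile.
                # Conceptually, a Tensor Core does a step like this in one hardware operation.
                for i in range(i0, min(i0 + tile, n)):
                    for j in range(j0, min(j0 + tile, m)):
                        acc = c[i][j]
                        for p in range(k0, min(k0 + tile, k)):
                            acc += a[i][p] * b[p][j]
                        c[i][j] = acc
    return c


# 6x5 @ 5x7 example: dimensions that aren't multiples of the tile size still work.
a = [[i + j for j in range(5)] for i in range(6)]
b = [[i * j - 2 for j in range(7)] for i in range(5)]
reference = [[sum(a[i][p] * b[p][j] for p in range(5)) for j in range(7)] for i in range(6)]
print(tiled_matmul(a, b) == reference)  # True
```

The result is identical to a plain matrix product; what changes is the order of the work, which is what lets hardware keep small tiles in fast on-chip storage while it multiplies them.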

That said, AMD's recent upgrades to the ROCm platform and its MI-series accelerators have made its GPUs far more competitive, and you'll see them on our list. However, Nvidia's GPUs are still the best GPUs for deep learning thanks to their well-optimized software ecosystem and widespread framework support (e.g., TensorFlow, PyTorch, JAX). The best GPUs for machine learning should be highly compatible with these frameworks, because a mismatch can mean weaker acceleration, patchier driver and library support (e.g., NVIDIA's cuDNN, TensorRT), and poorer long-term scalability.

On non-Nvidia hardware, you also won't have access to the NVIDIA CUDA Toolkit, which provides GPU-accelerated libraries, a C and C++ compiler and runtime, and optimization and debugging tools.
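A quick way to see which GPU stack your framework actually uses is to ask it. The sketch below assumes PyTorch (one of the frameworks named above) and relies on documented calls: `torch.cuda.is_available()`, `torch.cuda.get_device_name()`, `torch.backends.cudnn.version()`, and `torch.version.cuda` / `torch.version.hip`, which report whether the installed build targets Nvidia's CUDA or AMD's ROCm (HIP). ROCm builds of PyTorch reuse the `torch.cuda` namespace, so the version fields are what tell them apart. If PyTorch isn't installed, the function simply says so; the function name is our own.

```python
def describe_accelerator():
    """Report which GPU backend PyTorch can use, or why none is available."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"

    if not torch.cuda.is_available():
        return "No supported GPU found; PyTorch will run on the CPU"

    # ROCm builds set torch.version.hip; CUDA builds leave it as None.
    if getattr(torch.version, "hip", None):
        backend = f"ROCm/HIP {torch.version.hip}"
    else:
        # cuDNN is the NVIDIA library mentioned above; version() returns None if unavailable.
        backend = f"CUDA {torch.version.cuda}, cuDNN {torch.backends.cudnn.version()}"
    return f"{torch.cuda.get_device_name(0)} via {backend}"


print(describe_accelerator())
```

On a machine with an RTX card this prints the card's name alongside the CUDA and cuDNN versions, which is a fast sanity check that drivers and libraries line up before you start a long training run.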

VRAM (Video RAM), Memory Standard, and Memory Bandwidth

As with anything computer-related, memory is important, and the same applies to the best GPUs for machine learning and DL. Training datasets can grow extremely large (up to multiple TBs for deep learning), and while they're usually streamed to the GPU in batches rather than loaded all at once, the model's weights, activations, and other intermediate data must fit in VRAM during training and inference. That's why the best GPUs for machine learning have plenty of VRAM. The best GPU for AI training should also have high memory bandwidth, so it can move this data quickly and keep its cores busy.
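As a rough illustration of why VRAM matters, the memory needed just for model weights is the parameter count times the bytes per parameter (4 for FP32, 2 for FP16). For training with the Adam optimizer in FP32, a common rule of thumb is about 16 bytes per parameter: weights, gradients, and Adam's two moment estimates at 4 bytes each, before counting activations. The sketch below applies those assumptions; the function names and the 7-billion-parameter example are hypothetical.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2}


def weights_gb(num_params, precision="fp16"):
    """VRAM for the weights alone, in decimal gigabytes (1 GB = 10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def adam_training_gb(num_params):
    """Rule-of-thumb VRAM for FP32 training with Adam, excluding activations.

    4 bytes each for weights, gradients, Adam's first moment, and Adam's second moment.
    """
    return num_params * (4 + 4 + 4 + 4) / 1e9


params = 7_000_000_000  # hypothetical 7B-parameter model
print(weights_gb(params, "fp16"))  # 14.0
print(weights_gb(params, "fp32"))  # 28.0
print(adam_training_gb(params))    # 112.0
```

Even before activations, a model of that size needs 14 GB just to hold its FP16 weights, and full FP32 training with Adam would far exceed a single 24 GB card.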

Lastly, the memory standard is an important factor when choosing the best GPUs for deep learning. GPU memory is typically either GDDR (Graphics Double Data Rate) or HBM (High Bandwidth Memory). While GDDR offers high bandwidth for workloads like machine learning and gaming, the best machine learning GPUs use HBM, which delivers much higher bandwidth with better power efficiency.

| GPU Type | VRAM Capacity | Memory Bandwidth | Memory Standard | Best For |
|---|---|---|---|---|
| Entry-level (e.g., RTX 3060, RTX 4060) | 8GB – 12GB | ~200-300 GB/s | GDDR6 | Small models, image classification, hobby projects |
| Mid-Range (e.g., RTX 3090, RTX 4090) | 24GB | ~1,000 GB/s | GDDR6X | Large datasets, deep neural networks, transformers |
| High-end AI GPUs (e.g., Nvidia A100) | 40GB – 80GB | ~1,600+ GB/s | HBM2/HBM2e | Large language models (LLMs), AI research, enterprise-level ML |
| Super High-end GPUs (e.g., Nvidia H100, AMD Instinct MI300X) | 80GB – 256GB | ~2,000+ GB/s | HBM3 | Large-scale AI training, supercomputing, research on massive datasets |
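Memory bandwidth determines how quickly a GPU can move data in and out of VRAM. One simple way to compare the tiers in the table above is to ask how long a single pass over the same amount of data takes at each tier's approximate bandwidth (for instance, reading every weight of a 10 GB model once). The sketch ignores caches and other overheads, so treat the results as idealized lower bounds; the function name and the 10 GB figure are hypothetical.

```python
# Approximate bandwidths (GB/s) taken from the table above
# (upper end of the entry-level range, lower bounds for the HBM tiers).
TIER_BANDWIDTH_GBPS = {
    "Entry-level (GDDR6)": 300,
    "Mid-range (GDDR6X)": 1_000,
    "High-end (HBM2)": 1_600,
    "Super high-end (HBM3)": 2_000,
}


def transfer_ms(data_gb, bandwidth_gbps):
    """Idealized time in milliseconds to read data_gb once at bandwidth_gbps."""
    return data_gb / bandwidth_gbps * 1_000


for tier, bw in TIER_BANDWIDTH_GBPS.items():
    print(f"{tier}: {transfer_ms(10, bw):.2f} ms per 10 GB pass")
```

A workload that reads its data thousands of times per training run multiplies these differences accordingly, which is why HBM-based cards pull ahead on large models even before their extra compute is counted.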

For those specifically working on large language models like the ones behind ChatGPT, Cloudzy offers a ChatGPT-optimized VPS solution with the power needed for smooth fine-tuning and inference.

TFLOPS (Teraflops) and Floating Point Precision

Naturally, GPU performance is measured by its processing power, which depends on three factors: TFLOPS, memory bandwidth, and floating-point precision. We've already covered memory bandwidth above; here's what each of the other two means and why it's important. TFLOPS (teraflops) measures how fast a GPU handles complex calculations. Rather than measuring a processor's clock speed (how many cycles it completes per second), TFLOPS measures how many trillion floating-point operations a GPU can perform per second. Put simply, TFLOPS tells you how powerful a GPU is at math-heavy tasks.
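For a GPU's standard (non-Tensor-Core) FP32 rate, the headline TFLOPS number is usually derived as cores × boost clock × 2, because each core can complete one fused multiply-add (counted as two floating-point operations) per clock. The sketch below reproduces the RTX 4090's 82.6 TFLOPS from its published 16,384 CUDA cores and 2.52 GHz boost clock, then uses the result for an idealized runtime estimate. Real workloads reach only a fraction of peak, and the function names are hypothetical.

```python
def peak_tflops(cores, boost_clock_ghz, flops_per_core_per_clock=2):
    """Theoretical peak throughput; 2 FLOPs per clock = one fused multiply-add."""
    return cores * boost_clock_ghz * flops_per_core_per_clock / 1_000


def ideal_seconds(total_flops, tflops):
    """Lower-bound runtime if the GPU sustained its peak rate (it never quite does)."""
    return total_flops / (tflops * 1e12)


rtx_4090 = peak_tflops(16_384, 2.52)
print(round(rtx_4090, 1))  # 82.6

# A 4096 x 4096 by 4096 x 4096 matrix product is 2 * 4096**3 FLOPs.
print(f"{ideal_seconds(2 * 4096**3, rtx_4090) * 1_000:.2f} ms")  # 1.66 ms
```

The same formula explains why TFLOPS figures depend on precision: Tensor Cores complete many more operations per clock at FP16 or FP8 than the standard cores do at FP32, so the quoted peak rises as precision drops.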

Floating-point precision, as the name suggests, determines how accurately numbers are represented in the model's calculations. Higher precision (e.g., FP32) gives more accurate results but at a performance cost, while lower precision (e.g., FP16) speeds up processing with slightly reduced accuracy, which is often acceptable for AI and deep learning tasks. The best GPUs for deep learning support several precisions, so you can pick the right trade-off for each workload.

| Precision | Use Case | Example Applications |
|---|---|---|
| FP32 (Single Precision) | Deep learning model training | Image recognition (ResNet, VGG) |
| TF32 (TensorFloat-32) | Faster FP32-style training on Tensor Cores | NLP, recommendation systems |
| FP16 (Half Precision) | Mixed-precision training and fast inference | Autonomous driving, speech recognition, AI video enhancement |
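Python's standard `struct` module can pack numbers as IEEE 754 half precision (format code `'e'`) and single precision (`'f'`), which makes the accuracy trade-off easy to see: storing a value in fewer bits rounds it more coarsely. TF32 has no `struct` format code, so it isn't shown, and this illustrates the number formats themselves rather than how a GPU executes them; the helper name is our own.

```python
import struct


def round_trip(value, fmt):
    """Store value in the given IEEE 754 format and read it back as a Python float."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


for name, fmt in [("FP32", "f"), ("FP16", "e")]:
    stored = round_trip(0.1, fmt)
    print(f"{name}: 0.1 is stored as {stored!r} (error {abs(stored - 0.1):.1e})")

# FP16 has an 11-bit significand, so above 2048 not every integer is representable.
print(round_trip(2049.0, "f"), round_trip(2049.0, "e"))  # 2049.0 2048.0
```

FP16's rounding error on 0.1 is thousands of times larger than FP32's, yet still small enough for most neural-network weights and activations, which is why half precision is so widely used for speed.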

Instead of investing heavily in physical hardware, you can instantly access Cloudzy's Deep Learning GPU VPS, powered by RTX 4090s and optimized for machine learning and deep learning workloads.

Best GPUs for Machine Learning in 2025

Now that you have a good idea of what the best GPUs for machine learning should have, here's our list of the best GPUs, compared by TFLOPS, memory bandwidth, VRAM, and more.

| GPU | VRAM | Memory Bandwidth | Memory Standard | Peak TFLOPS* | Floating Point Precision | Compatibility |
|---|---|---|---|---|---|---|
| NVIDIA H100 NVL | 188 GB (2 × 94 GB) | 7.8 TB/s (2 × 3.9 TB/s) | HBM3 | 3,958 | FP64, FP32, FP16 | CUDA, TensorFlow, PyTorch |
| NVIDIA A100 Tensor Core | 80 GB | 2 TB/s | HBM2e | 624 | FP64, FP32, FP16 | CUDA, TensorFlow, PyTorch |
| NVIDIA RTX 4090 | 24 GB | 1.008 TB/s | GDDR6X | 82.6 | FP32, FP16 | CUDA, TensorFlow, PyTorch |
| NVIDIA RTX A6000 Tensor Core | 48 GB | 768 GB/s | GDDR6 | 38.7 | FP64, FP32, FP16 | CUDA, TensorFlow, PyTorch |
| NVIDIA GeForce RTX 4070 | 12 GB | 504 GB/s | GDDR6X | 29.1 | FP32, FP16 | CUDA, TensorFlow, PyTorch |
| NVIDIA RTX 3090 Ti | 24 GB | 1.008 TB/s | GDDR6X | 40 | FP64, FP32, FP16 | CUDA, TensorFlow, PyTorch |
| AMD Instinct MI300 | 128 GB | 5.3 TB/s | HBM3 | 60 | FP64, FP32, FP16 | ROCm, TensorFlow, PyTorch |

*Vendor peak figures at different precisions: FP8 (H100 NVL, per card) and FP16 (A100) Tensor Core throughput with sparsity, FP64 for the MI300, and standard FP32 for the RTX cards. Only compare rows measured at the same precision.
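One practical way to use this table is to filter it by your memory requirement first, since a model that doesn't fit in VRAM has to be split across several cards or offloaded to slower system memory. The sketch below copies the per-card VRAM figures from the table (treating the H100 NVL's 188 GB as two 94 GB cards) and returns the cards that meet a given requirement; the 40 GB requirement in the example and the names in the code are hypothetical.

```python
# Per-card VRAM in GB, copied from the comparison table.
# The H100 NVL's 188 GB is two cards with 94 GB each.
VRAM_GB = {
    "NVIDIA H100 NVL": 94,
    "NVIDIA A100 Tensor Core": 80,
    "NVIDIA RTX 4090": 24,
    "NVIDIA RTX A6000": 48,
    "NVIDIA GeForce RTX 4070": 12,
    "NVIDIA RTX 3090 Ti": 24,
    "AMD Instinct MI300": 128,
}


def cards_that_fit(required_gb, vram=VRAM_GB):
    """Return card names with at least required_gb of VRAM, largest first."""
    fits = [name for name, gb in vram.items() if gb >= required_gb]
    return sorted(fits, key=lambda name: vram[name], reverse=True)


print(cards_that_fit(40))
```

Among the cards that fit, you can then weigh memory bandwidth, TFLOPS, framework support, and price.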

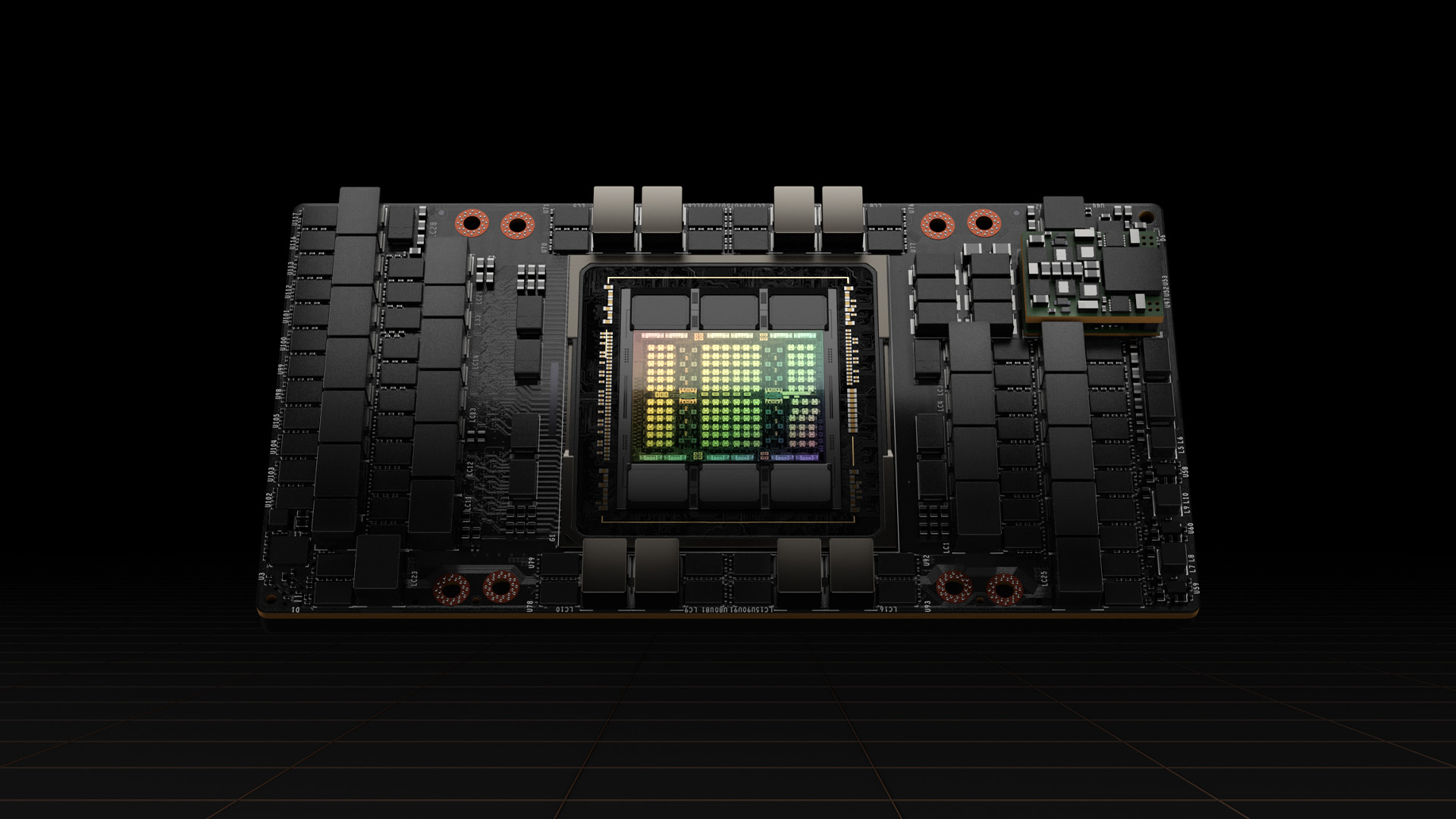

NVIDIA H100 NVL

The H100 NVL, our pick for the best machine learning GPU, offers exceptional performance for deep learning at scale and is optimized for multi-tenant, high-performance workloads.

- Best For: Cutting-edge AI research, large-scale model training, and inference.

- Downside: Extremely expensive and primarily suited for enterprise-level or research environments.

NVIDIA A100 Tensor Core GPU

The A100 provides massive performance for neural networks with 80 GB of high-bandwidth memory (HBM2), suitable for heavy-duty workloads.

- Best For: Large-scale machine learning models, AI research, and cloud-based applications.

- Downside: Expensive, mostly targeted at enterprises.

NVIDIA RTX 4090

Excellent for both gaming and AI workloads, featuring 24 GB of GDDR6X memory and massive parallel computing capability.

- Best For: High-end ML tasks and AI research requiring extreme computational power.

- Downside: Power-hungry, high cost, and large size.

NVIDIA RTX A6000 Tensor Core GPU

Supports AI applications with 48 GB of GDDR6 memory, well-suited for workstations and professional creators.

- Best For: AI research, deep learning, and high-performance workloads.

- Downside: High cost, typically suited for professional environments.

NVIDIA GeForce RTX 4070

Good balance of price and performance with strong ray-tracing capabilities, featuring 12 GB of GDDR6X memory.

- Best For: Enthusiasts and smaller businesses with medium-level machine learning needs.

- Downside: Limited VRAM for larger datasets and very large models.

NVIDIA RTX 3090 Ti

High memory capacity (24 GB of GDDR6X) and computational power, great for training medium-to-large models.

- Best For: Enthusiasts and research applications needing powerful AI processing.

- Downside: Very costly, consumes a lot of power, and can be overkill for smaller projects.

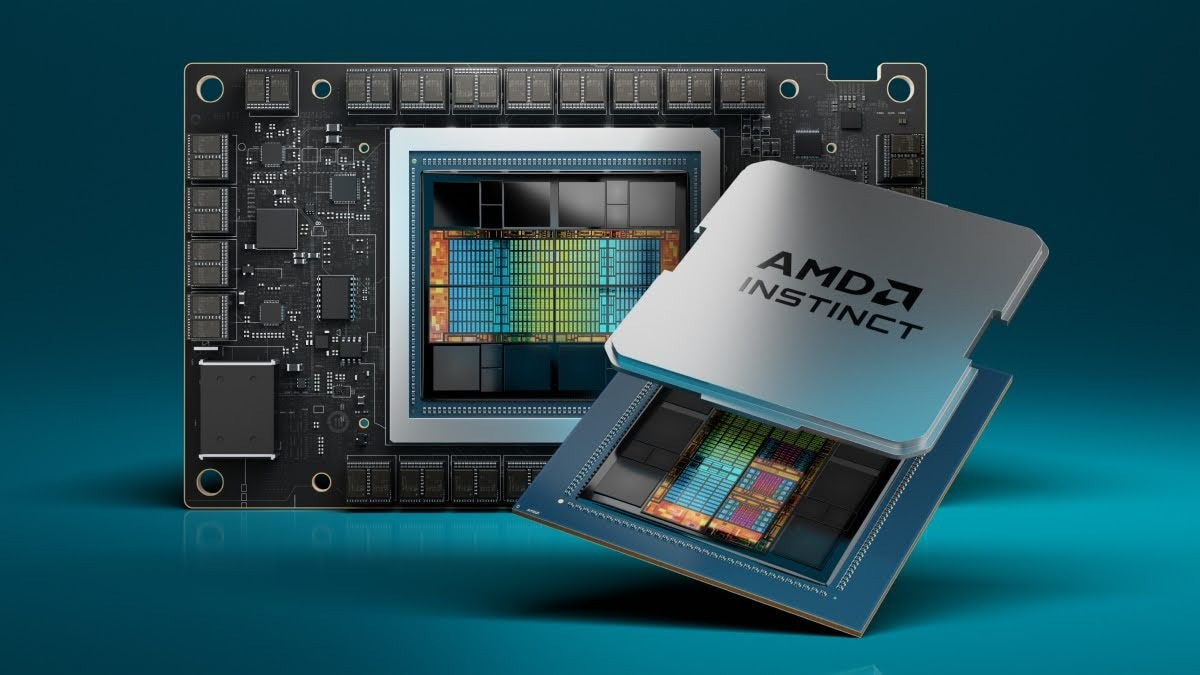

AMD Instinct MI300

Great for AI and HPC workloads, with 128 GB of HBM3 memory and competitive performance.

- Best For: Machine learning workloads on AMD-centric setups.

- Downside: Less established in deep learning than NVIDIA, with narrower framework and tooling support.

Cloudzy’s Cloud GPU VPS

The RTX 4090 is undoubtedly one of the best GPUs for machine learning available today; however, it's expensive, it'll drive up your electricity bills, and its size may force you to upgrade to a bigger computer case or rearrange your other components. It's a headache, which is why we at Cloudzy now offer an online GPU for machine learning so that you don't have to worry about any of those issues. Our GPU VPS is equipped with up to 2 Nvidia RTX 4090 GPUs, 4 TB of NVMe SSD storage, 25 TB of bandwidth, and 48 vCPUs!

All at affordable prices, with hourly and monthly pay-as-you-go billing and a wide variety of payment options, including PayPal, Alipay, credit cards (via Stripe), PerfectMoney, Bitcoin, and other cryptocurrencies.

Lastly, in the worst-case scenario that you're unhappy with our service, we offer a 14-day money-back guarantee!

Augmented Reality (AR) Cloud platforms rely heavily on high-performance GPUs to deliver real-time, immersive experiences. Just as GPUs with CUDA and Tensor cores are critical for training deep learning models, they are equally vital for rendering complex AR environments and supporting AI-driven features like object recognition and spatial mapping. At Cloudzy, our AR Cloud leverages cutting-edge GPU technology to ensure seamless performance, low latency, and scalability, making it ideal for businesses looking to deploy AR applications at scale.

Whether you’re building AI applications, training models, or conducting research, our AI VPS solutions are designed to deliver the best GPU performance at a fraction of the usual cost.

Final Thoughts

With computational needs growing and AI models becoming larger and more complex, GPUs will remain an integral part of our lives. So it's worth reading up on them to understand what they are and how they work.

That's why I strongly suggest you check out Tim Dettmers' piece covering everything there is to know about GPUs for deep learning, along with practical advice on choosing one. He is both academically honored and well-versed in deep learning.