In the ’60s and ’70s, monolithic architecture was favored for developing applications due to limited computing resources, which required combining all functionalities into a single, cohesive unit.

That was until the late ’90s and 2000s, when the monolithic structure started to become too limited for the ever-growing size and complexity of applications, especially with the rise of the internet and distributed systems.

This led to the development of more modular approaches, such as service-oriented architectures (SOA) and, later, microservices architecture (MSA), which eventually became prominent in the early 2010s.

That said, this is merely a brief explanation of the basic concept and use of microservices. So, let’s discuss how microservices replaced monolithic architecture, how microservices work, and some examples of microservices. Afterward, we’ll discuss key aspects of microservices deployment and what to do if you want to deploy microservices.

What Are Microservices?

As I mentioned earlier, microservices emerged as a solution to increasing application complexity and size, allowing companies to break down functions into independently deployable services.

The term “microservices” was popularized by industry experts like Martin Fowler and James Lewis, who described it in detail in a widely cited 2014 article. Their work defined key principles and characteristics, including the need for independently deployable services, decentralized data management, and technology agnosticism.

Since then, microservices have become a mainstream architectural choice, supported by advancements in containerization technologies like Docker, orchestration tools like Kubernetes, and serverless computing platforms. But how do microservices work?

How Do Microservices Work?

At its core, a microservices architecture breaks down a large application into smaller, distinct services, each responsible for a specific business capability. These services communicate with each other over a network, often through REST APIs, gRPC, or message brokers like RabbitMQ or Apache Kafka.
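To make the request/response style concrete, here is a minimal sketch of one service exposing a REST-style endpoint and another calling it over HTTP, using only Python’s standard library. The inventory service, the `/inventory/<sku>` route, and the sample data are hypothetical; a production service would typically use a web framework and run in its own process or container.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from urllib.request import urlopen

# Hypothetical data owned exclusively by the inventory service.
INVENTORY = {"sku-1": 12, "sku-2": 0}


class InventoryHandler(BaseHTTPRequestHandler):
    """Inventory service: answers GET /inventory/<sku> with JSON."""

    def do_GET(self) -> None:
        parts = self.path.strip("/").split("/")
        if len(parts) == 2 and parts[0] == "inventory" and parts[1] in INVENTORY:
            status, body = 200, {"sku": parts[1], "stock": INVENTORY[parts[1]]}
        else:
            status, body = 404, {"error": "not found"}
        payload = json.dumps(body).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, format: str, *args) -> None:
        pass  # silence per-request logging for the demo


def start_inventory_service(port: int = 0) -> ThreadingHTTPServer:
    """Run the service on a background thread; port 0 picks any free port."""
    server = ThreadingHTTPServer(("127.0.0.1", port), InventoryHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def get_stock(base_url: str, sku: str) -> int:
    """Caller side (e.g. an order service): a synchronous REST call over the network."""
    with urlopen(f"{base_url}/inventory/{sku}", timeout=5) as response:
        return json.loads(response.read())["stock"]


if __name__ == "__main__":
    server = start_inventory_service()
    host, port = server.server_address
    print(get_stock(f"http://{host}:{port}", "sku-1"))  # prints 12
    server.shutdown()
    server.server_close()
```

The two sides share nothing but the URL and the JSON shape, which is what lets each service be rewritten or redeployed independently.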

Drawing on the characteristics Martin Fowler and James Lewis described, microservices typically share the following four traits:

- Single Responsibility: Each microservice is designed to perform a specific task or function, allowing for specialization and reducing complexity.

- Independence: Microservices can be developed, deployed, and scaled independently of one another, which provides flexibility and resilience.

- Decentralized Data Management: Microservices often have their own databases, avoiding the need for a single, centralized database.

- Technology Agnosticism: Teams can choose the best technology for each service without being bound by the choices of other services.

This approach contrasts with traditional monolithic architecture, in which all application components are tightly integrated into a single, cohesive unit.

Key Stages of Microservices Deployment

While a microservices architecture offers a myriad of benefits, such as high scalability, flexibility, efficiency, and fault isolation, it takes a good deal of planning and a solid understanding of how to deploy microservices effectively for it to succeed.

That’s why a comprehensive understanding of the key concepts, stages, and best practices of microservices deployment is essential to a successful microservices architecture. So, let’s explore the key stages of microservices deployment and what each stage entails.

Planning and Preparing for the Deployment of Microservices

All good things require planning and patience, and to deploy microservices successfully, you’ll certainly need a good deal of planning and patience. That’s why it’s important to follow microservices best practices and plan and prepare everything you need when deploying microservices.

As I mentioned earlier, one of microservices’ key principles and characteristics is the Single Responsibility Principle. By staying faithful to this principle and making sure that each microservice focuses on and is responsible for one function and capability, you allow your team to develop, deploy, and scale services independently.

Closely related to this is the loose coupling design principle: each service depends as little as possible on other services and interacts with them only through well-defined interfaces. In turn, changes or updates to one service don’t affect other services, and each service can be scaled independently.

This decreases the risk of cascading failures, where a problem or failure in one part of a system triggers a chain reaction, leading to failures throughout the system and bringing down the entire service.

As an extension of the loose coupling design principle, another important practice when deploying microservices is giving each service its own dedicated data storage, which prevents conflicts between services and allows each one to scale on its own.
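The sketch below illustrates the database-per-service idea with two hypothetical services, each holding its own private in-memory SQLite database. The order service never touches the customers table; it only goes through the customer service’s public method. In a real deployment, that call would cross the network (for example, as a REST request) rather than being a direct Python method call.

```python
import sqlite3
from typing import Optional


class CustomerService:
    """Owns the customers table; no other service connects to this database."""

    def __init__(self) -> None:
        self._db = sqlite3.connect(":memory:")  # private store for this service
        self._db.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")

    def add_customer(self, name: str) -> int:
        cur = self._db.execute("INSERT INTO customers (name) VALUES (?)", (name,))
        self._db.commit()
        return cur.lastrowid

    def get_customer_name(self, customer_id: int) -> Optional[str]:
        # Public interface: other services call this instead of querying the table.
        row = self._db.execute(
            "SELECT name FROM customers WHERE id = ?", (customer_id,)
        ).fetchone()
        return row[0] if row else None


class OrderService:
    """Owns the orders table and reaches customer data only via CustomerService."""

    def __init__(self, customers: CustomerService) -> None:
        self._db = sqlite3.connect(":memory:")  # separate store, separate schema
        self._db.execute(
            "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, item TEXT)"
        )
        self._customers = customers

    def place_order(self, customer_id: int, item: str) -> int:
        if self._customers.get_customer_name(customer_id) is None:
            raise ValueError(f"unknown customer {customer_id}")
        cur = self._db.execute(
            "INSERT INTO orders (customer_id, item) VALUES (?, ?)", (customer_id, item)
        )
        self._db.commit()
        return cur.lastrowid
```

Because each schema is private, the customer team can change its table layout without breaking the order service, as long as `get_customer_name` keeps its contract.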

Additionally, you’ll need asynchronous microservices communication patterns, such as messaging through a message broker, so that services can communicate without direct dependencies on one another.

The final piece of the puzzle is implementing Continuous Integration and Continuous Delivery (CI/CD) pipelines for microservices. These pipelines allow teams to deploy new features or fixes through CI/CD tools like Jenkins and GitLab, allowing organizations to maintain system stability while frequently releasing new capabilities.

Now that you have an overall idea of the planning and preparation necessary for microservices deployment, let’s talk about microservices deployment strategies.

Microservices Deployment Strategies

When you deploy microservices, the right deployment strategy depends on service function, traffic, infrastructure setup, team expertise, and cost considerations. That said, the most common microservices deployment strategies are as follows:

- Service Instance per Container: In this approach, each microservice runs in its own container, offering better isolation than running multiple service instances directly on a shared host. Containers facilitate easy scaling and improve resource allocation.

- Service Instance per Virtual Machine: Each service runs in a separate virtual machine (VM), providing even greater isolation than containers. While this improves security and stability, it typically incurs more overhead.

- Phased Releases: Initially, deploy microservice versions to a small subset of users, testing their stability before a full rollout. This approach minimizes impact if issues arise and allows for quick rollbacks to maintain system integrity.

- Blue-Green Deployment: This method uses two identical production environments, with one environment serving live traffic while the other is used for testing the next release. Blue-green deployment allows for easy rollbacks and zero-downtime updates, as traffic can be seamlessly switched between the two environments.

- Staged Releases: This strategy involves gradually rolling out updates to different user segments or environments. It often starts with internal environments before reaching production, limiting the blast radius of any potential issues and allowing teams to address problems in stages.

- Serverless Deployment: This approach leverages serverless platforms like AWS Fargate and Google Cloud Run, which automate infrastructure management by handling scaling and resource allocation for you. With serverless deployment, there’s no need to manage underlying servers, enabling you to focus on your microservices themselves.
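Two of these strategies boil down to simple routing decisions, sketched below under stated assumptions. The phased-release function hashes a user ID into one of 100 buckets, so a given user consistently sees the same version while the rollout percentage grows; the blue-green router flips all traffic between two environments in one step, which is also how a rollback works. The version labels and environment URLs are hypothetical placeholders; in practice, this routing usually lives in a load balancer, ingress, or service mesh rather than in application code.

```python
import hashlib


def rollout_bucket(user_id: str) -> int:
    """Map a user ID to a stable bucket in 0..99 (same user, same bucket)."""
    digest = hashlib.sha256(user_id.encode()).digest()
    return int.from_bytes(digest[:4], "big") % 100


def phased_release_version(user_id: str, rollout_percent: int) -> str:
    """Phased release: roughly rollout_percent% of users get the new version."""
    return "v2" if rollout_bucket(user_id) < rollout_percent else "v1"


class BlueGreenRouter:
    """Blue-green: two full environments; all traffic goes to the live one."""

    def __init__(self, blue_url: str, green_url: str) -> None:
        self.environments = {"blue": blue_url, "green": green_url}
        self.live = "blue"

    def target(self) -> str:
        return self.environments[self.live]

    def switch(self) -> None:
        # Cut over (or roll back) in a single step by flipping the live color.
        self.live = "green" if self.live == "blue" else "blue"
```

Because buckets are fixed per user, raising the percentage from 10 to 25 only adds users to the new version; nobody who already saw `v2` is flipped back to `v1`.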

Once you’ve chosen one of the above strategies to deploy your microservices, you will need a microservices orchestration tool.

Microservices Orchestration

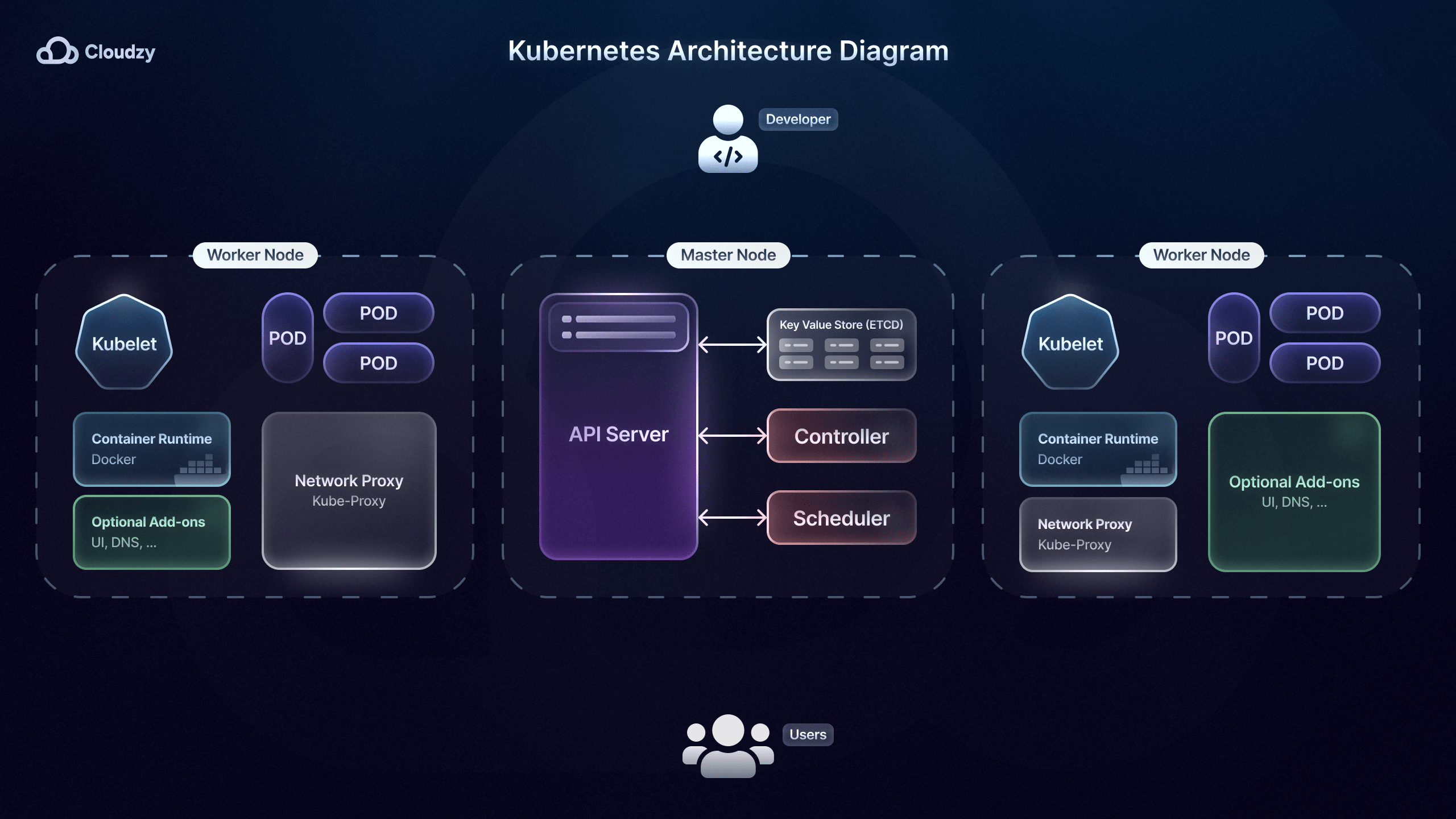

After choosing a deployment strategy, you’re going to need a conductor of sorts for microservice orchestration. Microservice orchestration tools, such as Kubernetes, help automate the deployment, scaling, monitoring, and management of containerized microservices.

Airbnb, for example, uses Kubernetes, allowing its engineers to deploy hundreds of changes to their microservices without manual oversight. One important feature of microservices orchestration tools like Kubernetes is the built-in load balancing.

Having a competent load-balancing feature helps distribute incoming traffic across multiple instances of a microservice. This prevents any single instance from becoming a bottleneck and enhances the system’s ability to handle spikes in demand.
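A minimal round-robin balancer shows the basic idea of spreading requests across instances. This is an illustrative sketch only: Kubernetes Services balance traffic at the network level and, depending on the proxy mode, don’t necessarily use strict round-robin. The instance addresses in the usage note are hypothetical.

```python
import itertools
from typing import Iterable


class RoundRobinBalancer:
    """Hands each incoming request to the next instance in a fixed rotation."""

    def __init__(self, instances: Iterable[str]) -> None:
        pool = list(instances)
        if not pool:
            raise ValueError("at least one instance is required")
        self._rotation = itertools.cycle(pool)

    def next_instance(self) -> str:
        return next(self._rotation)
```

For example, `RoundRobinBalancer(["10.0.1.11:8080", "10.0.1.12:8080", "10.0.1.13:8080"])` sends the first, second, and third requests to different instances and the fourth back to the first, so no single instance absorbs a spike alone.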

Kubernetes plays a significant role in managing microservices through its self-healing capabilities: failed containers are automatically restarted or replaced. The New York Times leverages this feature to maintain its microservices without downtime or impact on the user experience.
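Self-healing in Kubernetes follows a reconciliation pattern: controllers repeatedly compare the desired state (for example, three replicas) with what is actually running and act to close the gap. The toy controller below models that loop in a few lines. `Pod` and `ReplicaController` are hypothetical names, not the Kubernetes API, and real health signals come from probes rather than a boolean flag.

```python
import itertools
from dataclasses import dataclass, field
from typing import List


@dataclass
class Pod:
    name: str
    healthy: bool = True  # stands in for the result of a liveness probe


@dataclass
class ReplicaController:
    """Toy reconciliation loop that keeps `desired` healthy pods running."""
    desired: int
    pods: List[Pod] = field(default_factory=list)
    _ids: itertools.count = field(default_factory=itertools.count, init=False, repr=False)

    def reconcile(self) -> List[str]:
        # 1. Observe: drop pods that failed their health check.
        self.pods = [pod for pod in self.pods if pod.healthy]
        # 2. Act: start replacements until the desired count is reached.
        while len(self.pods) < self.desired:
            self.pods.append(Pod(name=f"pod-{next(self._ids)}"))
        # 3. Scale down if the desired count was lowered.
        del self.pods[self.desired:]
        return [pod.name for pod in self.pods]
```

Running `reconcile()` on a schedule is what makes the system converge: a crashed pod is simply a gap between desired and actual state that the next pass fills.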

Furthermore, Kubernetes also helps with microservice security by keeping configuration separate from application code: non-sensitive settings go into ConfigMaps, while sensitive values, such as database credentials or API keys, go into Secrets. This is especially important for companies and services, like Uber, that deal with sensitive customer and user info.
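For illustration, the function below builds a Kubernetes Secret manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts just like YAML. The secret name and credentials are hypothetical placeholders. Note that values under `data` are only base64-encoded, not encrypted, so access control and, ideally, encryption at rest still matter.

```python
import base64
import json


def make_secret_manifest(name: str, values: dict) -> dict:
    """Build a Kubernetes Secret; entries under `data` must be base64-encoded."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": {
            key: base64.b64encode(value.encode()).decode()
            for key, value in values.items()
        },
    }


if __name__ == "__main__":
    # Hypothetical placeholder values; never commit real credentials.
    manifest = make_secret_manifest(
        "orders-db-credentials", {"DB_USER": "orders", "DB_PASSWORD": "change-me"}
    )
    print(json.dumps(manifest, indent=2))  # e.g. pipe into: kubectl apply -f -
```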

Lastly, microservices orchestration tools like Kubernetes are particularly beneficial to deployment strategies that involve rolling updates and rollbacks, such as staged releases. Rolling updates deploy new microservice versions without service interruptions by replacing instances gradually, so some instances of the old version keep serving traffic until their replacements are ready.
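As a sketch of how this is configured, the function below builds a Deployment manifest (again as a Python dictionary that can be serialized to JSON) combining a rolling-update strategy with a liveness probe, the check Kubernetes uses to decide when to restart a container. The image name, port, and `/healthz` path are hypothetical.

```python
import json


def make_deployment(name: str, image: str, replicas: int = 3, port: int = 8080) -> dict:
    """Deployment with a rolling-update strategy and a liveness probe."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "strategy": {
                "type": "RollingUpdate",
                # Add at most one extra pod at a time; never drop below `replicas`.
                "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                        # Kubernetes restarts the container if this check keeps failing.
                        "livenessProbe": {
                            "httpGet": {"path": "/healthz", "port": port},
                            "periodSeconds": 10,
                        },
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(make_deployment("orders", "registry.example.com/orders:1.4.2"), indent=2))
```

With `maxSurge: 1` and `maxUnavailable: 0`, Kubernetes brings up one new pod at a time and removes an old pod only once its replacement is available. If a release misbehaves, `kubectl rollout undo deployment/orders` reverts to the previous revision.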

Once you’ve set up your microservices orchestration tool, you’ll need to build and automate CI/CD pipelines for microservices deployment.

CI/CD Pipelines for Microservices Deployment

As we talked about earlier, Continuous Integration and Continuous Delivery pipelines are important aspects of microservices deployment. The CI part of the pipeline automatically builds and tests every code change as it is merged.

Then, the CD part comes into play: whenever a code change passes the testing and integration stages, the service is automatically deployed to a microservices orchestration tool such as a Kubernetes cluster.

Furthermore, the testing and integration stages are all done automatically by the CI/CD pipelines as unit tests, integration tests, and end-to-end tests are incorporated into the pipeline.

This allows teams to validate updates at each stage while maintaining system stability. Plus, if a code change still causes issues despite all this testing, automated rollbacks can revert the service to the previous stable version.
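The control flow of such a pipeline can be sketched as a small function: run each test stage in order, stop before anything reaches production if a stage fails, deploy otherwise, and roll back automatically if a post-deployment smoke test fails. The stage names and callbacks are hypothetical stand-ins; tools such as Jenkins, GitLab, or CircleCI define these stages in their own configuration formats rather than in Python.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage], deploy: Callable[[], None],
                 smoke_test: Callable[[], bool],
                 rollback: Callable[[], None]) -> str:
    """Run test stages in order, deploy if all pass, roll back if the release fails."""
    for name, stage_passed in stages:
        if not stage_passed():
            # Fail fast: a broken change never reaches production.
            return f"stopped at {name}"
    deploy()
    if not smoke_test():
        # Post-deployment check failed: revert to the last stable version.
        rollback()
        return "rolled back"
    return "deployed"
```

For example, `run_pipeline([("unit", run_unit_tests), ("integration", run_integration_tests), ("e2e", run_e2e_tests)], deploy, smoke_test, rollback)` mirrors the unit, integration, and end-to-end ordering, where each `run_*` callable is a hypothetical hook returning `True` on success.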

Lastly, implementing CI/CD pipelines for microservices according to microservices best practices helps organizations achieve faster development, reduce manual errors, and maintain high-quality standards.

Many companies like Spotify, Expedia, iRobot, Lufthansa, Pandora, etc., use CI/CD pipelines for microservices through CI/CD tools like CircleCI, AWS CodePipeline, and GitLab to automate deployment processes, ensure consistent code quality, and rapidly deliver new features while maintaining system stability.

Microservices Communication Patterns

How microservices communicate with each other depends on the function, overall architecture, desired scalability, and reliability requirements of your microservices. Generally, two main kinds of microservices communication patterns are employed: synchronous and asynchronous.

In synchronous microservices communication patterns, services interact in real time, meaning a service sends a request and waits for a response before proceeding. The most commonly used synchronous approaches are REST (Representational State Transfer) APIs, gRPC (a remote procedure call framework originally developed at Google), and GraphQL.

This kind of communication pattern is typically used by companies and industries that require real-time data processing and immediate responses. Industries like finance, healthcare, and e-commerce often use synchronous communication to ensure that transactions, data retrieval, or interactions happen instantly, maintaining a smooth and responsive user experience.

That said, while synchronous microservices communication patterns offer benefits like real-time responses and simplicity, they also have drawbacks: they couple services tightly, so a slow or overloaded service becomes a bottleneck for every service waiting on it, and they scale poorly, with response times and latency rising during high-traffic periods.

On the other hand, asynchronous microservices communication patterns are typically more suitable for microservices since they follow the loose coupling principle we discussed earlier.

This type of microservices communication pattern decouples services by allowing them to send and receive messages through a broker like Kafka or RabbitMQ. By sending messages to a queue that acts as a buffer, services communicate independently rather than waiting for a response as they would in synchronous communication patterns. This buffer enables other services to process messages at their own pace, allowing the sender to continue its work without waiting for the recipient.
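The sketch below mimics this with Python’s thread-safe `queue.Queue` standing in for a broker such as RabbitMQ: a hypothetical order service publishes messages and returns immediately, while a separate email-service worker consumes them at its own pace. A real broker would add persistence, acknowledgments, and network transport, none of which are modeled here, and the `None` sentinel exists only to stop the demo worker.

```python
import queue
import threading
from typing import List


def order_service(broker: queue.Queue, order_ids: List[int]) -> None:
    """Producer: publishes one event per order and moves on without waiting."""
    for order_id in order_ids:
        broker.put({"type": "order_placed", "order_id": order_id})


def email_service(broker: queue.Queue, sent: List[str]) -> None:
    """Consumer: processes events at its own pace until it sees the stop sentinel."""
    while True:
        event = broker.get()
        try:
            if event is None:  # demo-only sentinel to stop the worker
                return
            sent.append(f"confirmation email for order {event['order_id']}")
        finally:
            broker.task_done()


def run_demo(order_ids: List[int]) -> List[str]:
    broker: queue.Queue = queue.Queue()  # stand-in for a broker queue
    sent: List[str] = []
    worker = threading.Thread(target=email_service, args=(broker, sent))
    worker.start()
    order_service(broker, order_ids)  # returns as soon as messages are queued
    broker.put(None)
    worker.join()
    return sent


if __name__ == "__main__":
    print(run_demo([101, 102]))
```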

Not only do asynchronous microservices communication patterns offer a decoupled structure for microservices deployment, but they can also deliver near-real-time responsiveness, even though the sender doesn’t wait for a reply.

This is possible with event-driven communication patterns, in which services emit events when a specific action occurs. Other services can subscribe to these events and react accordingly. This allows for highly responsive systems that react to changes in real time without direct coupling between services.

Furthermore, in asynchronous Publish-Subscribe (Pub/Sub) microservices communication patterns, the services (publishers) send messages to a topic, and other services (subscribers) listen to that topic to receive updates. This model supports multiple subscribers, simultaneously broadcasting messages to many services.
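A toy in-memory broker illustrates the fan-out: the publisher sends one message to a topic, and every subscriber to that topic receives it. Unlike Google Cloud Pub/Sub or Redis, this sketch delivers messages synchronously within a single process; the topic name and subscribing services are hypothetical.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Handler = Callable[[dict], None]


class InMemoryBroker:
    """Toy pub/sub broker: every subscriber to a topic gets every message."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: dict) -> int:
        """Deliver to all subscribers; the publisher never knows who they are."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(message)
        return len(handlers)


if __name__ == "__main__":
    broker = InMemoryBroker()
    analytics, notifications = [], []
    broker.subscribe("user.signed_up", analytics.append)
    broker.subscribe("user.signed_up", lambda m: notifications.append(f"welcome {m['user']}"))
    broker.publish("user.signed_up", {"user": "ada"})
    print(analytics, notifications)
```

Adding a third subscriber, say a fraud-check service, requires no change to the publisher, which is the main appeal of the pattern.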

Lastly, similar to event-driven patterns, services in asynchronous choreography-based saga patterns also communicate through events; however, in this pattern, a particular order is in place, meaning each event triggers the next step of a multi-step transaction in a specific service. If a step fails, the services that already completed their steps run compensating actions, such as issuing a refund, to undo their work.

The difference here is that in event-driven patterns, there isn’t a certain sequence or workflow, and multiple services can react to an event, whereas the choreography-based saga pattern follows a specific process and order.
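Here is a minimal sketch of a choreography-based saga for a hypothetical order flow, using a simple in-process event bus. There is no central coordinator: each service handler reacts to the previous step’s event and emits the next one. If inventory can’t be reserved, the payment service runs a compensating action (a refund) and the order service cancels the order. The event names and services are illustrative assumptions, and a real system would exchange these events through a broker.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List, Tuple

Handler = Callable[[dict], None]


class EventBus:
    """In-process stand-in for a broker; records every event for inspection."""

    def __init__(self) -> None:
        self.handlers: DefaultDict[str, List[Handler]] = defaultdict(list)
        self.log: List[str] = []

    def on(self, event_type: str, handler: Handler) -> None:
        self.handlers[event_type].append(handler)

    def emit(self, event_type: str, payload: dict) -> None:
        self.log.append(event_type)
        for handler in self.handlers[event_type]:
            handler(payload)


def wire_order_saga(bus: EventBus, stock: Dict[str, int]) -> Tuple[Handler, Dict[int, str]]:
    orders: Dict[int, str] = {}

    # Order service
    def create_order(order: dict) -> None:
        orders[order["id"]] = "PENDING"
        bus.emit("OrderCreated", order)

    def confirm_order(order: dict) -> None:
        orders[order["id"]] = "CONFIRMED"

    def cancel_order(order: dict) -> None:
        orders[order["id"]] = "CANCELLED"

    # Payment service
    def charge_payment(order: dict) -> None:
        bus.emit("PaymentCompleted", order)

    def refund_payment(order: dict) -> None:  # compensating action
        bus.emit("PaymentRefunded", order)

    # Inventory service
    def reserve_stock(order: dict) -> None:
        if stock.get(order["sku"], 0) > 0:
            stock[order["sku"]] -= 1
            bus.emit("InventoryReserved", order)
        else:
            bus.emit("InventoryFailed", order)

    # Each subscription links one step to the next; no central coordinator.
    bus.on("OrderCreated", charge_payment)
    bus.on("PaymentCompleted", reserve_stock)
    bus.on("InventoryReserved", confirm_order)
    bus.on("InventoryFailed", refund_payment)
    bus.on("PaymentRefunded", cancel_order)
    return create_order, orders
```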

Which type of asynchronous microservices communication pattern you use depends on the task and overall function of your microservices. Message queues such as RabbitMQ and Amazon SQS are typically used for task scheduling and workload distribution, as well as for order processing and notification systems in e-commerce.

Event-driven message brokers, such as Apache Kafka and AWS EventBridge, are typically used for processing large-scale event streams in real-time and event routing between microservices in areas such as financial services and AWS environments.

As for Publish-Subscribe (Pub/Sub) message brokers like Google Cloud Pub/Sub and Redis Streams, they are usually used for scalable messaging across distributed systems, such as real-time analytics, event ingestion, real-time notifications, and chat applications.

Finally, choreography-based sagas, which run on top of message or event brokers like those above rather than being a separate kind of broker, are mainly used for e-commerce order processing, travel booking systems, and other use cases where complex, multi-step transactions need to be coordinated across multiple services without central control.

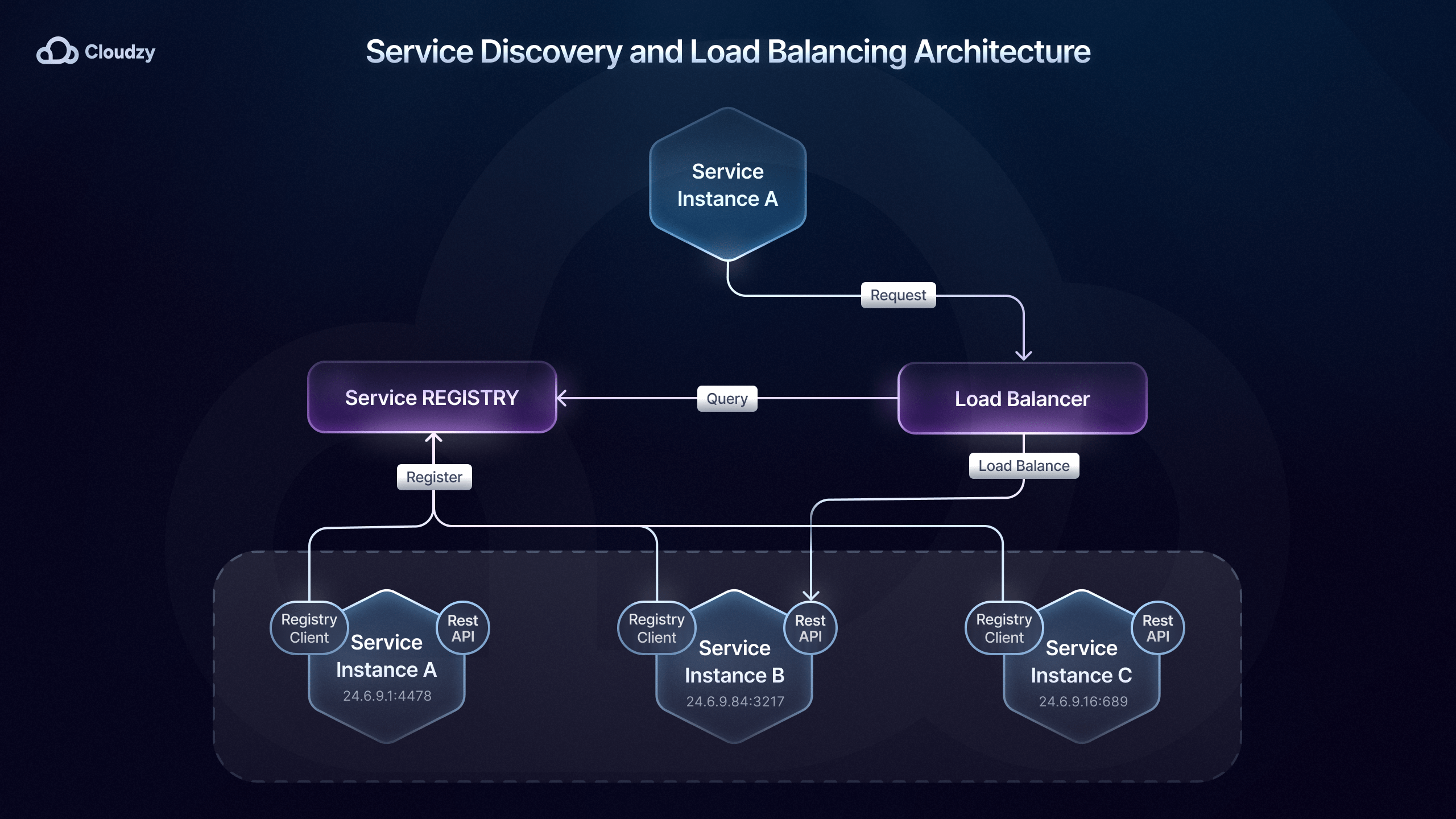

Microservice Service Discovery

Once you’ve set up and implemented a communication pattern that suits your needs, you’ll need to ensure that your services can locate each other in the first place. Here, too, microservices orchestration tools such as Kubernetes play an important role.

This is done through Kubernetes’ built-in DNS-based service discovery: each Service gets a stable DNS name, and Kubernetes keeps the records and the list of backing pod IP addresses up to date as instances scale or move within the cluster.

This method of microservices service discovery is called server-side discovery since the routing responsibility is delegated to a load balancer, which then queries the registry and directs traffic to the appropriate instance.

On the other hand, we also have the client-side discovery method for microservice service discovery, where the service or API gateway queries a service registry such as Consul or Eureka to find available instances.

Choosing which method of service discovery is best for your microservices deployment depends on the system’s requirements and scale.

With client-side microservice service discovery, the client has full control over which instance it communicates with. This allows for more customization and removes the extra hop through a central load balancer, although every client then needs its own discovery logic and a service registry is still required.

For instance, Netflix’s microservices deployment uses client-side microservice service discovery with Eureka and Ribbon for load balancing, allowing the client to choose the best instance based on criteria like latency and server load.
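The following sketch shows the client-side approach with a hypothetical in-memory registry: the caller looks up healthy instances itself and picks the one with the lowest observed latency. It is not Eureka’s or Ribbon’s API; in practice, instances register over the network and send heartbeats so that stale entries expire.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Instance:
    address: str
    healthy: bool = True
    avg_latency_ms: float = 0.0


@dataclass
class ServiceRegistry:
    """Hypothetical registry; services register themselves on startup."""
    services: Dict[str, List[Instance]] = field(default_factory=dict)

    def register(self, service: str, instance: Instance) -> None:
        self.services.setdefault(service, []).append(instance)

    def lookup(self, service: str) -> List[Instance]:
        return [i for i in self.services.get(service, []) if i.healthy]


def choose_instance(registry: ServiceRegistry, service: str) -> str:
    """Client-side discovery: the caller picks an instance itself (lowest latency here)."""
    candidates = registry.lookup(service)
    if not candidates:
        raise LookupError(f"no healthy instances of {service}")
    return min(candidates, key=lambda i: i.avg_latency_ms).address
```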

However, server-side microservice service discovery is more suitable for larger environments since a centralized service discovery can improve efficiency and allow for consistent load balancing across a distributed system.

Server-side microservice service discovery solutions such as Kubernetes, AWS Elastic Load Balancing, and API Gateways (Kong, NGINX, etc.) help route traffic efficiently and maintain high availability and are used by companies like Airbnb, Pinterest, Expedia, Lyft, etc.

Microservice Security

While MSA outperforms monolithic architecture in many respects, security is one area where monoliths have the edge. Since microservices are built on the loose coupling principle and are distributed in nature, a single, general security measure at the perimeter is not enough to protect them.

Since each service must be secured independently, additional safeguards are necessary, as the attack surface is much larger in microservices. To this end, standards such as OAuth 2.0 and JSON Web Tokens (JWT) are commonly used for authentication and authorization.

Additionally, an API gateway is often employed to manage security across microservices, as it enforces authentication and authorization at the entry point. API gateways can also implement rate limiting, logging, and monitoring, which provide additional layers of microservice security.
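Rate limiting at a gateway is commonly implemented with a token bucket: each client gets a bucket that refills at a steady rate, each request spends one token, and requests are rejected once the bucket is empty. The sketch below is a minimal single-process version; the per-client limits and the `allow_request` hook are hypothetical, and real gateways such as Kong or NGINX configure rate limiting through their own settings (NGINX’s built-in module, for instance, uses a leaky-bucket variant).

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity  # start full so an initial burst is allowed
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # a gateway would typically answer HTTP 429 here


buckets: Dict[str, TokenBucket] = {}


def allow_request(api_key: str) -> bool:
    """Hypothetical gateway hook: one bucket per client API key."""
    if api_key not in buckets:
        buckets[api_key] = TokenBucket(capacity=10, rate=5)
    return buckets[api_key].allow()
```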

While these secure the main entry point, more microservice security measures are necessary to cover inter-service communication.

This is where service meshes come into play. They add a network security layer that encrypts traffic between services and enforces policies like mutual TLS (mTLS), in which both sides of a connection authenticate each other with certificates. As a result, service meshes provide comprehensive service-to-service encryption that significantly improves microservice security.

Microservice Scaling

One of the biggest benefits of MSA, and the very reason it was developed to replace monolithic architecture, is its high scalability. Typically, microservices scaling can happen in two ways: vertical and horizontal.

Basically, vertical microservice scaling (scaling up) is adding more resources, such as CPU or memory, to an existing instance. Alternatively, horizontal microservice scaling (scaling out) adds more instances of a service and distributes the load across them to increase capacity.

In terms of implementation, vertical microservice scaling is the easier of the two since all you have to do is modify a single instance by upgrading to a larger server, increasing memory or processing power in a cloud instance, or adding more storage.

This type of scaling is typically used in cases where increasing RAM or CPU power can improve query performance and data processing, such as services that are responsible for in-memory caching.

That said, while vertical microservice scaling is easier and offers an immediate performance boost, it also has drawbacks. Vertical scaling is limited by the server’s hardware capacity, so at some point, you’ll need to switch to horizontal scaling to keep scaling.

Furthermore, vertical scaling is costly, as more powerful hardware and larger instances generally come with a high price tag. Lastly, if the scaled-up instance fails, the service goes down entirely, as there are no additional instances to handle the load.

For horizontal microservice scaling, rather than upgrading the resources of a single instance, you deploy new instances of that service. While these instances work independently, they run the same service and each handles part of the same workload.

Unlike vertical scaling, horizontal microservice scaling isn’t bound by the capacity of a single machine, so you can keep adding instances to handle increasing workloads and traffic spikes, offering much greater scalability.

Moreover, since you have several instances, you’re not putting all your eggs in one basket: if one goes down, the others can continue handling requests. Lastly, horizontal scaling is often more cost-efficient in the long run, as several smaller, cheaper instances can together deliver more reliable and powerful performance than a single large one.

That said, horizontal scaling requires load balancers, microservice service discovery mechanisms, and microservice orchestration tools to manage the additional instances, making your microservices architecture much more complex.

Horizontal scaling is more suited to use cases such as web services and applications such as e-commerce or social media platforms, which often experience fluctuating traffic and a high volume of requests.

That said, it’s not really an either-or choice, as microservices support both types of scaling and many cases call for both. Typically, smaller organizations start with vertical scaling as it’s much simpler to implement and manage, but as the application grows, they introduce horizontal scaling to handle heavier demand.

Lastly, cloud platforms offer auto-scaling services that automatically add or remove instances based on real-time demand, which significantly helps organizations balance vertical and horizontal scaling.
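As a worked example of auto-scaling, Kubernetes’ Horizontal Pod Autoscaler computes a target replica count from the ratio of the current metric to its target: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The function below sketches that rule, including the default 10% tolerance band within which no scaling happens and clamping to minimum and maximum replica counts. The example numbers are hypothetical, and the real controller also accounts for factors such as pod readiness and stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Sketch of the Horizontal Pod Autoscaler's core scaling rule."""
    ratio = current_metric / target_metric
    # Within the tolerance band, keep the current count to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Respect the configured bounds.
    return max(min_replicas, min(max_replicas, desired))
```

For instance, 4 replicas averaging 90% CPU against a 60% target give ceil(4 × 1.5) = 6 replicas, while 4 replicas at 30% scale in to 2.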

Microservice Monitoring

At this stage, you’re pretty much done with your microservices deployment; all that remains is to make sure it works consistently and reliably. This is where microservice monitoring tools like Prometheus and Grafana step in.

Prometheus collects time-series metrics and Grafana visualizes them, giving teams real-time insights into resource usage, latency, and error rates. Alongside them, distributed tracing tools such as Jaeger and Zipkin help visualize request flows across services, which can be massively beneficial for diagnosing issues.
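Distributed tracing works by propagating a trace ID with every request. The sketch below creates and forwards a header value in the W3C Trace Context `traceparent` format (a 2-hex-digit version, a 32-hex-digit trace ID, a 16-hex-digit parent span ID, and 2-hex-digit flags), which OpenTelemetry uses by default and can export to backends such as Jaeger and Zipkin. It is a simplified illustration; in practice, an OpenTelemetry SDK creates and propagates these headers for you.

```python
import re
import secrets

TRACEPARENT_RE = re.compile(r"^([0-9a-f]{2})-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_traceparent(sampled: bool = True) -> str:
    """Start a new trace at the edge (e.g. in the API gateway)."""
    flags = "01" if sampled else "00"
    return f"00-{secrets.token_hex(16)}-{secrets.token_hex(8)}-{flags}"


def child_traceparent(incoming: str) -> str:
    """Continue the caller's trace: same trace ID, new span ID for this hop."""
    match = TRACEPARENT_RE.match(incoming)
    if match is None:
        return new_traceparent()  # unparseable header: start a fresh trace
    version, trace_id, _parent_span_id, flags = match.groups()
    return f"{version}-{trace_id}-{secrets.token_hex(8)}-{flags}"
```

Each service would read the incoming `traceparent` header and send `child_traceparent(...)` on its own outgoing calls, so the tracing backend can stitch every hop into one request timeline by trace ID.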

Lastly, since failures can cascade across services due to the distributed design of microservices, log aggregation is a critical practice in microservice monitoring. By consolidating logs into a centralized platform and setting up real-time alerts, you can catch issues early and respond proactively before they impact users.
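Here is a small sketch of both halves of that practice: a `logging.Formatter` that writes one JSON object per line, so an aggregator can index fields such as the service name and trace ID, and a simple error-rate check of the kind an alerting rule might evaluate. The field names and the 5% threshold are hypothetical choices, not the format of any particular platform.

```python
import json
import logging
from typing import Iterable


class JsonFormatter(logging.Formatter):
    """Formats each record as a single JSON line for a log aggregator."""

    def __init__(self, service: str) -> None:
        super().__init__()
        self.service = service

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "service": self.service,
            "level": record.levelname,
            "message": record.getMessage(),
            # trace_id arrives via logger.error(..., extra={"trace_id": ...})
            "trace_id": getattr(record, "trace_id", None),
        })


def error_rate_exceeded(status_codes: Iterable[int], threshold: float = 0.05) -> bool:
    """Alert rule sketch: True when the share of 5xx responses exceeds the threshold."""
    codes = list(status_codes)
    if not codes:
        return False
    errors = sum(1 for code in codes if 500 <= code <= 599)
    return errors / len(codes) > threshold


if __name__ == "__main__":
    handler = logging.StreamHandler()
    handler.setFormatter(JsonFormatter(service="orders"))
    logger = logging.getLogger("orders")
    logger.addHandler(handler)
    logger.error("payment failed", extra={"trace_id": "4bf92f3577b34da6a3ce929d0e0e4736"})
```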

Final Thoughts

While the world of microservices is certainly a difficult one to wrap your head around, understanding the fundamentals and key stages of microservices deployment can make the whole process much easier. Plus, as the years pass, more and more tools with significantly more features are at your disposal, making microservices deployment simpler than ever.

FAQ

What deployment strategies are commonly used for microservices?

While there are many different strategies for microservices deployment, the most commonly used deployment strategies include service instances per container, phased releases, blue-green deployment, and serverless deployment, each offering different levels of isolation, flexibility, and scalability.

What role does Kubernetes play in orchestrating microservices?

Microservices depend on orchestration tools such as Kubernetes to automate the deployment, scaling, and management of containerized services. These tools provide load balancing, auto-scaling, and self-healing capabilities that keep microservices resilient and efficient.

How can I ensure security in a microservices environment?

Due to their distributed nature, microservices are more complicated when it comes to security than monolithic architecture. Security in microservices involves authenticating and authorizing requests, encrypting inter-service communication, and implementing API gateways and service meshes like Istio for centralized security management.