A cloud server is a virtual instance running on shared physical hardware that delivers compute, storage, and networking resources on demand. Understanding cloud servers shows teams how to replace lengthy hardware procurement with environments that spin up in minutes. Rather than waiting for physical racks, developers launch test, staging, or production environments with API calls or a few clicks in a management console.

When you treat servers like code, encoding infrastructure configurations in version control, running automated validation, and enforcing policies before any instance boots, you gain repeatable, auditable deployments. Cloud Server Explained is aimed both at startups cutting data-center bills and at enterprises managing steady workloads alongside seasonal surges.
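As a minimal sketch of what "enforcing policies before any instance boots" can look like, the Python below checks an instance definition against a few rules and returns any violations. The `InstanceSpec` fields, the rules, and the 32-vCPU cap are hypothetical assumptions for illustration, not any provider's schema; in practice a CI job would run a check like this against the configuration stored in version control and block the deployment when violations are found.

```python
from dataclasses import dataclass, field


@dataclass
class InstanceSpec:
    """Hypothetical instance definition, as it might be stored in version control."""
    name: str
    vcpus: int
    memory_gb: int
    encrypted_disk: bool
    open_ports: list[int] = field(default_factory=list)
    tags: dict[str, str] = field(default_factory=dict)


MAX_VCPUS = 32  # hypothetical organizational limit, not a provider quota


def check_policies(spec: InstanceSpec) -> list[str]:
    """Return policy violations; an empty list means the spec may be deployed."""
    violations = []
    if not spec.encrypted_disk:
        violations.append(f"{spec.name}: disk encryption must be enabled")
    if 22 in spec.open_ports:
        violations.append(f"{spec.name}: SSH (port 22) must not be publicly exposed")
    if "owner" not in spec.tags:
        violations.append(f"{spec.name}: missing required 'owner' tag")
    if spec.vcpus > MAX_VCPUS:
        violations.append(f"{spec.name}: {spec.vcpus} vCPUs exceeds limit of {MAX_VCPUS}")
    return violations


if __name__ == "__main__":
    spec = InstanceSpec("web-1", vcpus=4, memory_gb=8, encrypted_disk=False,
                        open_ports=[22, 443], tags={"env": "staging"})
    for problem in check_policies(spec):
        print("DENY", problem)
```

Keeping the rules as plain functions over a declarative spec means the same check can run locally, in CI, and in code review, which is what makes the resulting deployments repeatable and auditable.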

Organizations seeking cost-efficient setups often align their architecture with small business cloud computing solutions. By breaking free of physical hardware limits, teams gain rapid experimentation, clear cost tracking, and smooth scaling. But what exactly is a cloud server?

What is a Cloud Server? Unveiling the Virtualized Environment

Cloud Server Explained describes a cloud server as an isolated virtual machine (VM) or container carved from a pool of shared hardware resources. Hypervisors such as VMware ESXi, Microsoft Hyper-V, or KVM partition CPU cores, memory, and storage using hardware virtualization extensions (Intel VT-x, AMD-V), while container engines such as Docker or containerd share the host OS kernel for lighter-weight isolation. Each instance behaves like a dedicated machine, with its own CPU quota, memory allocation, and file system, yet runs on multi-tenant infrastructure.

These virtual environments support a spectrum of workloads, from simple web proxies and CMS platforms to distributed big-data analytics and machine-learning pipelines. By adjusting CPU and memory allocations in near real time, often via API calls or orchestration commands, teams fine-tune performance without physically rewiring hardware (some platforms require a brief restart to apply a resize). Cloud Server Explained walks you through snapshot-based backups, live cloning for testing code changes, and blue-green deployment patterns that minimize downtime.
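The blue-green pattern mentioned above can be modeled in a few lines. This is a minimal in-memory sketch under stated assumptions: `BlueGreenRouter` and `Environment` are hypothetical names, and the "switch" is a pointer swap standing in for whatever load-balancer or DNS change a real platform would perform.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Environment:
    name: str     # "blue" or "green"
    version: str  # application version currently deployed here


class BlueGreenRouter:
    """In-memory model of blue-green switching; no real load balancer involved."""

    def __init__(self, blue: Environment, green: Environment):
        self.envs = {"blue": blue, "green": green}
        self.live = "blue"  # environment receiving production traffic

    @property
    def idle(self) -> str:
        return "green" if self.live == "blue" else "blue"

    @property
    def live_version(self) -> str:
        return self.envs[self.live].version

    def deploy(self, version: str, health_check: Callable[[Environment], bool]) -> bool:
        """Deploy to the idle environment; switch traffic only if it passes the health check."""
        target = self.envs[self.idle]
        previous = target.version
        target.version = version
        if not health_check(target):
            target.version = previous  # discard the failed release; live traffic never moved
            return False
        self.live = target.name  # cut-over is a single pointer swap
        return True

    def rollback(self) -> None:
        """Send traffic back to the other environment, still running the prior release."""
        self.live = self.idle


if __name__ == "__main__":
    router = BlueGreenRouter(Environment("blue", "1.0"), Environment("green", "1.0"))
    router.deploy("1.1", health_check=lambda env: True)
    print(router.live, router.live_version)  # green 1.1
```

Because the previous environment keeps running after the cut-over, rollback is just another pointer swap, which is where the pattern's low downtime comes from.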

Advanced techniques such as nested virtualization, which runs hypervisors inside VMs, create powerful lab and pre-production staging environments. NUMA-aware scheduling, which keeps a workload's vCPUs and memory on the same NUMA node, helps latency-sensitive applications such as in-memory caches and high-performance databases achieve consistent throughput on multi-socket servers.
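To illustrate the idea behind NUMA-aware placement, here is a simplified best-fit sketch that tries to fit a VM's vCPUs and memory entirely within one NUMA node. The `NumaNode` type and `place_on_single_node` helper are hypothetical; real hypervisor and orchestrator schedulers weigh many more factors.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class NumaNode:
    node_id: int
    free_cores: int
    free_memory_gb: int


def place_on_single_node(nodes: list[NumaNode], vcpus: int, memory_gb: int) -> Optional[int]:
    """Reserve a NUMA node that can hold the whole VM and return its id, or None.

    Best-fit heuristic: among nodes that fit, choose the one left with the fewest
    free cores, keeping roomier nodes available for larger VMs.
    """
    candidates = [n for n in nodes
                  if n.free_cores >= vcpus and n.free_memory_gb >= memory_gb]
    if not candidates:
        return None  # VM would have to span nodes and pay remote-memory latency
    best = min(candidates, key=lambda n: (n.free_cores - vcpus, n.node_id))
    best.free_cores -= vcpus
    best.free_memory_gb -= memory_gb
    return best.node_id


if __name__ == "__main__":
    host = [NumaNode(0, free_cores=8, free_memory_gb=64),
            NumaNode(1, free_cores=4, free_memory_gb=32)]
    print(place_on_single_node(host, vcpus=4, memory_gb=16))  # 1 (exact fit)
    print(place_on_single_node(host, vcpus=6, memory_gb=48))  # 0
    print(place_on_single_node(host, vcpus=4, memory_gb=32))  # None
```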

How Does a Cloud Server Work? Virtualization and Resource Pooling in Action

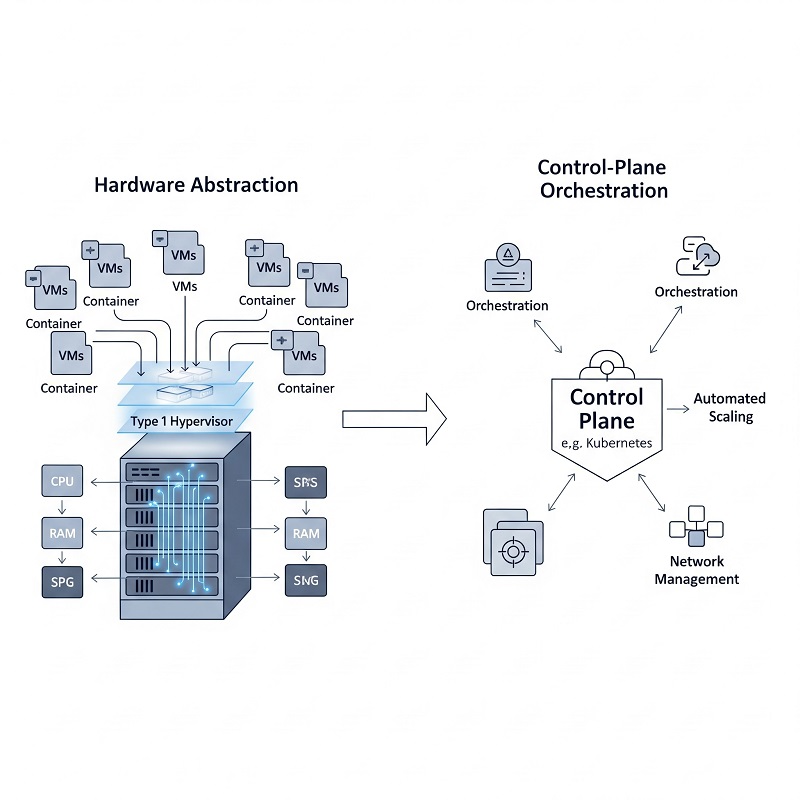

Cloud Server Explained breaks cloud server mechanics into two primary stages: hardware abstraction and control-plane orchestration. In the first stage, data centers host racks of servers built on multi-core Intel Xeon or AMD EPYC CPUs, backed by DDR4/DDR5 memory, high-bandwidth NVMe SSD arrays, and redundant power and cooling. These components connect over low-latency network fabrics that provide fast access to storage and compute clusters.

A Type 1 hypervisor, such as VMware ESXi, XenServer, or Microsoft Hyper-V, installs directly on this hardware and adds minimal overhead. Type 2 hypervisors (VirtualBox, Parallels) run atop a host operating system, which suits local testing but adds performance overhead. Container runtimes share the host OS kernel, so containers typically start in well under a second and use fewer resources than full VMs.

In the second stage, a control plane (Kubernetes for container-based workloads, or OpenStack/vSphere for VM orchestration) schedules instances based on policy rules, utilization metrics, and health checks. These control-plane services handle rolling updates, automated failovers, live migrations, and horizontal scaling. Meanwhile, software-defined networking (VXLAN overlays, or Kubernetes network plugins such as Calico and Cilium) runs on top of the physical switching fabric, enforcing micro-segmentation, security policies, and east-west routing without touching hardware configurations. Block-level storage volumes attach and detach on demand via APIs, and object stores serve as cost-effective off-site archives for snapshots and compliance data.
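The scheduling step can be sketched as a filter-then-score loop, loosely modeled on how the Kubernetes scheduler first filters out nodes that cannot run a workload and then scores the remaining ones. The `Host` type, the boolean health flag, and the "most free capacity after placement" scoring rule are simplifying assumptions, a stand-in for the real scheduler's scoring plugins rather than a copy of them.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Host:
    name: str
    cpu_capacity: float  # cores
    cpu_used: float
    mem_capacity_gb: float
    mem_used_gb: float
    healthy: bool        # result of the latest health check


def schedule(hosts: list[Host], cpu_request: float, mem_request_gb: float) -> Optional[str]:
    """Pick a host for a new instance: filter out hosts that cannot run it, then score the rest."""
    # Filter phase: drop unhealthy hosts and hosts without enough free capacity.
    feasible = [
        h for h in hosts
        if h.healthy
        and h.cpu_capacity - h.cpu_used >= cpu_request
        and h.mem_capacity_gb - h.mem_used_gb >= mem_request_gb
    ]
    if not feasible:
        return None

    # Score phase: prefer the host with the most free capacity left after placement,
    # a "least allocated" style rule that spreads load across hosts.
    def score(h: Host) -> float:
        cpu_free = (h.cpu_capacity - h.cpu_used - cpu_request) / h.cpu_capacity
        mem_free = (h.mem_capacity_gb - h.mem_used_gb - mem_request_gb) / h.mem_capacity_gb
        return (cpu_free + mem_free) / 2

    best = max(feasible, key=score)
    best.cpu_used += cpu_request
    best.mem_used_gb += mem_request_gb
    return best.name


if __name__ == "__main__":
    cluster = [Host("a", 16, 12, 64, 32, True),
               Host("b", 16, 4, 64, 16, True),
               Host("c", 32, 0, 128, 0, False)]  # unhealthy, never chosen
    print(schedule(cluster, cpu_request=2, mem_request_gb=8))  # b
```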

| Layer | Function | Example Technologies |
| --- | --- | --- |
| Hardware | Aggregates CPU, RAM, storage, and network | Intel Xeon, AMD EPYC, NVMe SSD |
| Virtualization | Creates isolated VMs or containers | VMware ESXi, KVM, Docker, containerd |
| Control Plane | Automates lifecycle, scaling, and failover | Kubernetes, OpenStack, vSphere |
| Networking & Storage | Delivers dynamic networks and on-demand storage | VXLAN, Ceph, Amazon EBS, Azure Disk |

Cloud Server Explained also covers performance tuning: making sure NVMe devices get their full PCIe 4.0 lane allocation for maximum I/O bandwidth, configuring erasure coding for resilient storage, and monitoring CPU steal time and NIC queue lengths to catch hidden slowdowns before they affect end users.
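CPU steal time is straightforward to measure on a Linux guest: the aggregate `cpu` line in `/proc/stat` includes a cumulative `steal` counter, and the share of steal ticks between two samples gives the percentage of time the hypervisor ran other work while this VM's vCPUs were ready to run. A minimal Python sketch, assuming a kernel that reports the steal field:

```python
def parse_cpu_line(line: str) -> list[int]:
    """Parse the aggregate 'cpu' line of /proc/stat into its counters (USER_HZ ticks)."""
    parts = line.split()
    if parts[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line")
    return [int(x) for x in parts[1:]]


def steal_percent(before: str, after: str) -> float:
    """Percentage of CPU time stolen by the hypervisor between two samples.

    Field order: user nice system idle iowait irq softirq steal guest guest_nice.
    guest and guest_nice are already included in user and nice, so only the
    first eight fields are summed for the total.
    """
    b = parse_cpu_line(before)[:8]
    a = parse_cpu_line(after)[:8]
    total = sum(a) - sum(b)
    if total <= 0:
        return 0.0
    return 100.0 * (a[7] - b[7]) / total


def read_cpu_line(path: str = "/proc/stat") -> str:
    with open(path) as f:
        return f.readline()  # the aggregate 'cpu' line comes first


if __name__ == "__main__":
    import os
    import time
    if os.path.exists("/proc/stat"):
        first = read_cpu_line()
        time.sleep(1)
        second = read_cpu_line()
        print(f"steal: {steal_percent(first, second):.2f}%")
```

Sampling over a short interval, rather than reading the counter once, matters because the counters are cumulative since boot; a one-off reading hides recent contention.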

Types of Cloud Server Deployment Models: Choosing the Right Configuration

Cloud Server Explained outlines four key deployment models, each tailored to specific requirements:

| Deployment Model | Management | Scale | Isolation | Typical Use Cases |
| --- | --- | --- | --- | --- |
| Public | Third-party cloud | Virtually unlimited | Logical | Development, web hosting, analytics |
| Private | On-premises or hosted | Hardware-constrained | Physical | Regulated data, high-security workloads |
| Hybrid | Mixed environments | Burst-capable | Mixed | Seasonal peaks, phased migrations |
| Multi-Cloud | Multiple platforms | Combined capacity of all providers | Varies | Disaster recovery, vendor flexibility |
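The "burst-capable" behavior of hybrid deployments can be sketched as a simple overflow rule: fill the private capacity first and send only the excess to a public cloud. The vCPU units, hourly granularity, and `split_demand` helper are hypothetical assumptions for illustration, as is the premise that private capacity is preferred because it is already paid for.

```python
def split_demand(hourly_demand: list[int], private_capacity: int) -> tuple[list[int], list[int]]:
    """Split each hour's vCPU demand between private capacity and public-cloud burst."""
    private, public = [], []
    for demand in hourly_demand:
        on_prem = min(demand, private_capacity)  # use owned capacity first
        private.append(on_prem)
        public.append(demand - on_prem)          # only the overflow bursts out
    return private, public


if __name__ == "__main__":
    private, public = split_demand([40, 80, 120, 60], private_capacity=100)
    print(private, public)  # [40, 80, 100, 60] [0, 0, 20, 0]
```

Summing the public list gives the burst vCPU-hours to budget for during a seasonal peak.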

Factors to Consider When Choosing a Cloud Server Solution

Before selecting a cloud server provider, map your needs against a comprehensive checklist. Cloud Server Explained uses these factors to uncover hidden risks and costs:

- Compute Performance: Compare vCPU clock speeds, core counts, cache sizes, and virtualization overhead.

- Memory & Storage: Review RAM ceilings, SSD IOPS, throughput, backup frequency, and archival tiers.

- Network Features: Examine baseline and burst bandwidth, private VLANs, DDoS mitigation, load-balancer integrations, and cross-region peering.

- Security & Compliance: Confirm encryption of data at rest and in transit, evidence of tenant isolation, key-management services, and audit logging; look for certifications such as ISO 27001 and documented compliance with regulations such as GDPR and HIPAA.

- Reliability & SLAs: Scrutinize uptime guarantees, support channels (email, chat, phone), on-call coverage, and incident response times.

- Cost Structures: Analyze pay-as-you-go rates, reserved discounts, spot/preemptible rates, data egress fees, and tiered pricing models.

- Integration & Toolchain Support: Confirm native or plugin support for Terraform, Ansible, Chef, Puppet, and Helm, plus identity integration through directory services and protocols (LDAP, SAML, OAuth).

- Global Footprint: Choose data center regions close to your end users to minimize latency and comply with data-residency laws.
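To compare the cost structures listed above, a rough monthly estimate can combine reserved, on-demand, and spot hours with data egress. The `Pricing` rates below are placeholders, not any provider's prices, and the model assumes reservations are billed for every hour of the month whether or not they are used.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # average hours per month (8760 / 12)


@dataclass
class Pricing:
    """Hypothetical rates for illustration only; substitute your provider's published prices."""
    on_demand_per_hour: float
    reserved_per_hour: float     # effective hourly rate after a reservation discount
    spot_per_hour: float
    egress_per_gb: float
    free_egress_gb: float = 0.0  # free allowance, if your provider offers one


def monthly_cost(p: Pricing, reserved_instances: int, on_demand_hours: float,
                 spot_hours: float, egress_gb: float) -> dict[str, float]:
    reserved = reserved_instances * HOURS_PER_MONTH * p.reserved_per_hour  # billed even if idle
    on_demand = on_demand_hours * p.on_demand_per_hour
    spot = spot_hours * p.spot_per_hour
    egress = max(0.0, egress_gb - p.free_egress_gb) * p.egress_per_gb
    return {"reserved": reserved, "on_demand": on_demand, "spot": spot,
            "egress": egress, "total": reserved + on_demand + spot + egress}


if __name__ == "__main__":
    rates = Pricing(on_demand_per_hour=0.10, reserved_per_hour=0.06,
                    spot_per_hour=0.03, egress_per_gb=0.09, free_egress_gb=100)
    bill = monthly_cost(rates, reserved_instances=2, on_demand_hours=200,
                        spot_hours=300, egress_gb=1100)
    print(f"total: {bill['total']:.2f}")  # total: 206.60
```

Breaking the total into line items makes it easy to see when egress, rather than compute, dominates the bill, which is exactly what a pilot migration's invoice reconciliation should confirm.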

Running a pilot migration, including failover drills and invoice reconciliation, exposes unexpected metadata charges, configuration gaps, and oversights. Cloud Server Explained pairs each criterion with migration case studies from e-commerce, healthcare, finance, and government sectors.

Examples of Cloud Servers: From Global Giants to Specialized Providers

Cloud Server Explained lines up major players and niche specialists side by side for easy comparison:

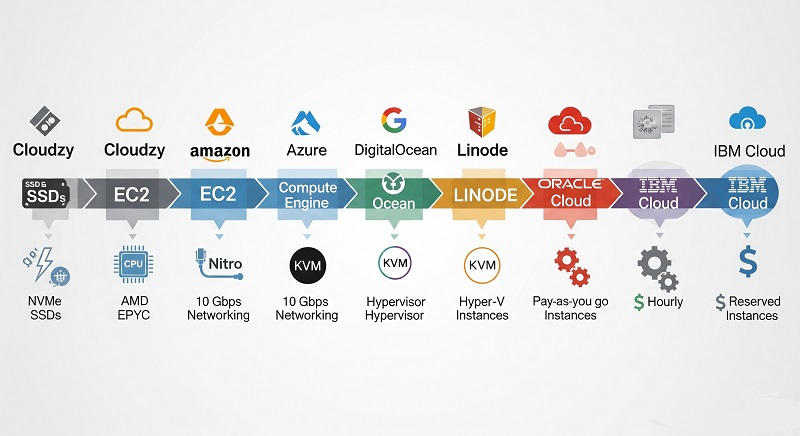

- Cloudzy Virtual Servers: NVMe SSD, AMD EPYC CPUs, 10 Gbps ports, flexible billing (pay-as-you-go, crypto), private VLANs, built-in DDoS mitigation.

- Amazon EC2: Nitro hypervisor, diverse instance families, strong serverless and container integrations (Lambda, ECS, EKS).

- Azure Virtual Machines: ExpressRoute private connections, deep Microsoft interoperability, and hybrid migration tools.

- Google Compute Engine: Custom CPU/memory shapes, live VM migration, premium networking SLAs.

- DigitalOcean Droplets: Flat-rate pricing, intuitive UI, extensive community documentation.

- Linode Instances: Budget-friendly bundles, standard profiles, and included DDoS protection.

- Oracle Cloud VMs: Bare-metal and VM options, integrated Oracle Database services.

- IBM Cloud VMs: KVM and PowerVM support, hardware security modules, and compliance-ready configurations.

Regional champions such as Alibaba Cloud ECS and OVHcloud target localized needs. Alibaba’s Asia-Pacific footprint helps meet data-residency requirements, and OVHcloud’s anti-DDoS network protects real-time streaming and gaming workloads.

Cloudzy VPS: Your High-Performance Cloud Server Solution

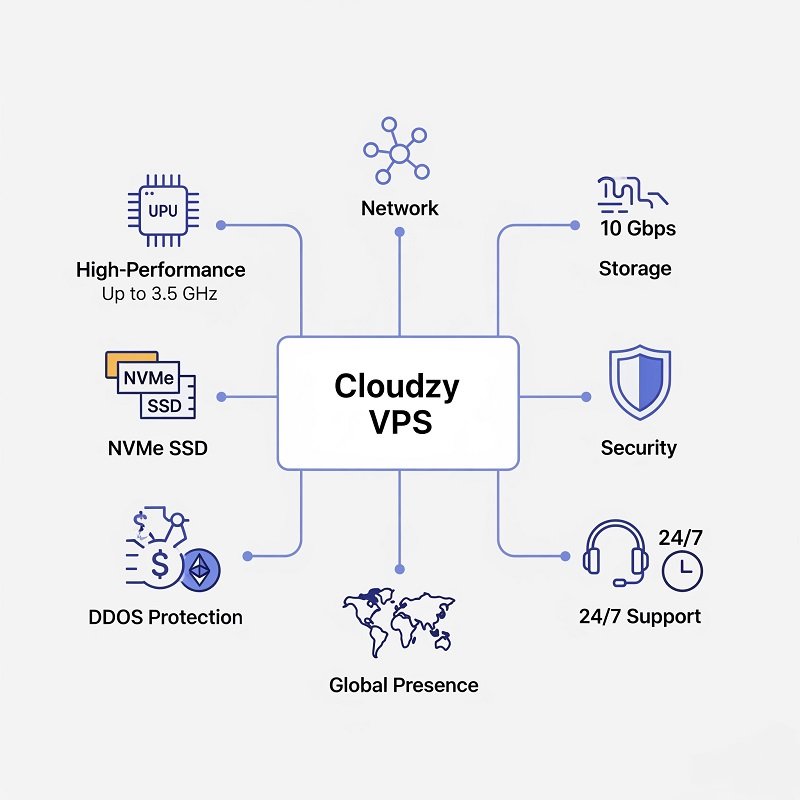

Cloudzy’s VPS lineup merges enterprise features with simplicity for demanding workloads:

- 32 vCPUs & 128 GB RAM slices on AMD EPYC-based hardware.

- 10 Gbps network with burst options and VLAN segmentation for secure traffic flows.

- NVMe SSD storage offering sub-millisecond latency, ideal for transactional databases and real-time analytics.

- Automated daily snapshots retained for seven days, plus optional cross-region replication.

- Flexible billing, hourly or monthly in USD, EUR, GBP, or crypto, with instant invoicing and no minimum contracts.

- Built-in DDoS protection with up to 10 Tbps of mitigation capacity at the network edge.

- Global presence across North America, Europe, and Asia-Pacific data centers.

- 24/7 expert support with guaranteed sub-15-minute response times.
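The seven-day snapshot retention in the list above corresponds to a rolling window. The sketch below shows one generic way to decide which daily snapshots fall outside such a window; it is not Cloudzy's implementation or API, `snapshots_to_delete` is a hypothetical helper, and counting today as one of the seven days is an assumption.

```python
from datetime import date, timedelta


def snapshots_to_delete(snapshot_dates: list[date], today: date, keep_days: int = 7) -> list[date]:
    """Return snapshot dates outside a rolling retention window, oldest first.

    With keep_days=7, snapshots from today and the previous six days are kept.
    """
    cutoff = today - timedelta(days=keep_days - 1)
    return sorted(d for d in snapshot_dates if d < cutoff)


if __name__ == "__main__":
    snaps = [date(2024, 5, d) for d in range(1, 11)]
    for d in snapshots_to_delete(snaps, today=date(2024, 5, 10)):
        print("delete", d.isoformat())
```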

Cloudzy combines private-cloud-level isolation, custom firewall rules, VLANs, and encrypted volumes with the deployment speed of public clouds. Cloud Server Explained shows how to plug Cloudzy VPS into Kubernetes clusters, Terraform scripts, or any modern CI/CD pipeline for complete infrastructure-as-code workflows.

Conclusion: Choosing the Right Cloud Server for Your Needs

Cloud Server Explained delivers a clear roadmap: align your application characteristics, traffic patterns, compliance needs, and budget with the right server model. Public cloud servers enable rapid development cycles and global reach, while private or hybrid servers protect sensitive data and suit steady workloads. Cloudzy VPS offers a practical middle ground: high-performance specs, transparent billing, and worldwide presence without the burden of hardware management.

As requirements evolve, revisit your evaluation factors and pilot new configurations. Use the Cloud Server Explained framework to guide architecture reviews, migrations, and capacity planning, making sure your infrastructure remains lean, resilient, and responsive to user demands.